** Welcome

*** { @unit = “”, @title = “The R Toolkit”, @lecture, @foldout }

Introducing R

The R Toolkit

In this course we cover the foundations of data programming with the R language. In order to create robust and dynamic analysis we need to use a couple of tools that were built to leverage the power of R and create compelling narratives. R Studio helps you manage projects by organizing files, scripts, packages and output. Markdown is a simple formatting convention that allows you to create publication-quality documents. And R Markdown is a specific version of Markdown that allows you to combine text and code to create data-driven documents.

R Markdown

Getting Started with R Markdown

You will have plenty of practice with these tools this semester. You will submit your labs as knitted R Markdown (RMD) files.

*** { @unit = “Aug 23”, @title = “Your Course Prep Checklist”, @assignment, @foldout }

- Read the Syllabus

- Install R and R Studio Desktop

- Sign-Up for a GitHub Account

- Fill out the doodle poll for review session times

- Introduce Yourself on YellowDig (see below)

*** { @unit = “Aug 23”, @title = “Introduce Yourself”, @assignment, @foldout }

Introduce yourself to the class

We will be using a discussion board called YellowDig for this course. Introduce yourself to the class and share a bit about:

- A little about yourself

- Your previous experience with data analytics

- One thing you hope to do with your new skills in data analytics

*** { @unit = “”, @title = “ORIENTATION”, @lecture, @foldout }

Welcome !

R is a foundational tool within a toolkit that I will refer to as the “data science ecosystem”.

If you were not able to make either Zoom session, I did a brief introduction to the “ecosystem” - the community of people that are creating cool analytical tools and building tutorials and case studies for how they might be applied, as well as a core set of tools that are all designed to work nicely together in order to implement projects.

You can think of R, R Studio, and Markdown kindof like Excel (analysis), Word (report-writing), and Power Point (presentations). R allows you to analyze your data, but these results are not useful unless you can share them with others. Here is where data-driven documents developed using R Studio and Markdown really shine. You can quickly package your R code as cool reports, websites, presentations, or dashboards to format the information in whatever way is most accessible and useful for your clients or stakeholders.

Getting Help

Nerds are stereotypically perceived as being hermetic, but in reality they have just created their own universes and civilizations. Surprisingly coding is a very social activity, and real-world analytics projects are almost always collaborative. You will learn how to use discussion boards to accelerate learning and facilitate collaboration, and social coding tools like GitHub to manage large data project.

We are going to throw a lot at you, but also provide a lot of support. Over these first couple of weeks feel free to reach out for anything you might need.

If you find something confusing let me know (likely others will as well).

- We can jump on a Zoom call to do a screen share if you want to walk through anything.

- You can post a question to the homework discussion board for data science I or program evaluation I.

- And you can join review sessions each week.

Reach out if you have questions or feel stuck!

References

Course Overview Powerpoint for Zoom Call

The course shells for CPP 523 and CPP 526 are located at:

https://ds4ps.org/cpp-526-fall-2019/

https://ds4ps.org/cpp-523-fall-2019/

The unofficial program website can be found at:

https://ds4ps.org/ms-prog-eval-data-analytics/

And the dashboard example in R can be found here:

** Week 1 - Functions and Vectors

*** { @unit = “”, @title = “Unit Overview”, @foldout }

Description

This section introduces functions and vectors, two important building blocks of data programming.

Learning Objectives

Once you have completed this section you will be able to

- create new objects in R

- use functions

- summarize vectors by type

- numeric vectors

- character vectors

- logical vectors

Assigned Reading

Required:

Background Chapters:

You will start simple and get practice with these tools on labs. Skim these, then return for reference as you get stuck or want to deepend your knowledge.

Lab

Lab-01 covers the following topics:

- R Markdown (template provided)

- Vectors

- numeric

- character

- factor

- logical

Functions

names() # variable names

head() # preview dataset

$ operator # reference a vector inside a dataset

length() # vector dimensions

dim(), nrow(), ncol() # dataset dimensions

sum(), summary() # summarize numeric vectors

table() # summarize factors / character vectors

Data:

Syracuse tax parcels: [ documentation ]

URL <- "https://raw.githubusercontent.com/DS4PS/Data-Science-Class/master/DATA/syr_parcels.csv"

dat <- read.csv( URL, stringsAsFactors=FALSE )

head( dat )

Downtown Syracuse

All 42,000 Parcels

*** { @unit = “Due Aug 26th”, @title = “Discussion Topic: The Promise of Big and Open Data”, @assignment, @foldout }

The Promise of Big, Open Data

The world is simultaneously generating more data than it has ever before, as well as pushing for policies for making government data more accessible and democratic. These trends and movements is an important enabling aspect of data science, becuse it provides opportunity for real insights that can change our understanding of systems and allow us to hold institutions accountable.

So ignoring potential problems with big and open data for now, read about two interesting cases where big and open data have offered deep insights into city planning and human nature.

“A Data Analyst’s Blog Is Transforming How New Yorkers See Their City”, NPR, Nov 2018.

How a blog saved OK Cupid, FiveThirtyEight Blog, Nov 2014.

ASSIGNMENT:

For your discussion topic this week, find one data-driven blog post from Ben Wellington’s I Quant NY and/or OK Cupid’s OK Trends where you discovered something cool that you did not know, and share it with the group. In your post highlight what is interesting about the example, and what data made it possible.

Please post your reflection as a new pin on YellowDig.

You can also check out Ben’s Ted Talk, or this short interview.

*** { @unit = “Due Aug 29th”, @title = “Lab 01”, @assignment, @foldout }

Lab-01 - Practice with Vectors

Submit Solutions to Canvas:

** Week 2 - Operators and Descriptives

*** { @unit = “”, @title = “Week 2 Reflection Point”, @foldout }

Beginning

Nobody tells this to people who are beginners, and I really wish somebody had told this to me.

All of us who do creative work, we get into it because we have good taste. But it’s like there is this gap. For the first couple years that you’re making stuff, what you’re making isn’t so good. It’s not that great. It’s trying to be good, it has ambition to be good, but it’s not that good.

But your taste, the thing that got you into the game, is still killer. And your taste is good enough that you can tell that what you’re making is kind of a disappointment to you. A lot of people never get past that phase. They quit.

Everybody I know who does interesting, creative work they went through years where they had really good taste and they could tell that what they were making wasn’t as good as they wanted it to be. They knew it fell short. Everybody goes through that.

And if you are just starting out or if you are still in this phase, you gotta know its normal and the most important thing you can do is do a lot of work. Do a huge volume of work. Put yourself on a deadline so that every week or every month you know you’re going to finish one story. It is only by going through a volume of work that you’re going to catch up and close that gap. And the work you’re making will be as good as your ambitions.

I took longer to figure out how to do this than anyone I’ve ever met. It takes awhile. It’s gonna take you a while. It’s normal to take a while. You just have to fight your way through that.

—Ira Glass on failure

It’s easy when you start out programming to get really frustrated and think, “Oh it’s me, I’m really stupid,” or, “I’m not made out to program.” But, that is absolutely not the case. Everyone gets frustrated. I still get frustrated occasionally when writing R code. It’s just a natural part of programming. So, it happens to everyone and gets less and less over time. Don’t blame yourself. Just take a break, do something fun, and then come back and try again later.

—Hadley Wickham on advice to young and old programmers

*** { @unit = “”, @title = “Unit Overview”, @foldout }

Description

This section introduces logical statements used to create custom groups from your data.

Learning Objectives

Once you have completed this section you will be able to

- translate human language phrases to a computer language

- create subsets of data

Assigned Reading

Required:

Group Construction with Logical Statements

Lab

Lab-02 covers the following topics:

- Logical operators

- Group construction

*** { @unit = “Due Sept 2nd”, @title = “Discussion Topic: A Tour of R Packages”, @assignment, @foldout }

A Tour of R Packages

You might not have heard, but nerd is the new black, data science is the sexiest job of the 21st century, and there is nothing hotter than learning R.

But what is R, and what are the nerds cool kids using it for?

This week, your task is to explore a few blogs about tools in R and find one package or application that you are excited about. It can be an analytics package, a graphics package, a specific application, or a tutorial on a topic that interests you. I don’t expect you use of understand the package or tutorial, rather just identify a tool that would be useful given your interests.

Mine, personally, was the package that allows you to create comic strip graphics in R:

Take note, this assignment asks you to explore a new community where the technical language is unfamiliar and the volume of information vast. There are currently over 15,000 packages available in R! Part of the goal of the assignment is to recognize the sheer volume of creativity in the R community and the scope of work that can be done with the language. But the immediate learning objective is to find some sources that make the content accessible. Here are a few to get you started:

Please post your reflection as a new pin on YellowDig:

*** { @unit = “Due Sept 5th”, @title = “Lab 02”, @assignment, @foldout }

Lab-02 - Constructing Groups

Read the following sections from the course chapter on groups before starting the lab:

1 Constructing Groups

- 1.1 Logical Operators

- 1.2 Selector Vectors

- 1.3 Usefulness of Selector Vectors

2 Subsets - 2.1 Compound Logical Statements

- 2.2 The Opposite-Of Operator

The rest of the chapter is useful information to come back to, but not needed for the lab. Similar to last week the chapter will highlight some easy ways to make errors with your code, not to convince you that R is hard, but rather to ensure you are paying attention to some subtle features of computer languages that can impact your data.

Submit Solutions to Canvas:

** Week 3 - Visualization

*** { @unit = “”, @title = “Week 3 Reflection Point”, @foldout }

Advice on Learning R

People naturally go through a few phases. When you start out, you don’t have many tips and techniques at your disposal. So, you are forced to do the simplest thing possible using the simplest ideas. And sometimes you face problems that are really hard to solve, because you don’t know quite the right techniques yet. So, the very earliest phase, you’ve got a few techniques that you understand really well, and you apply them everywhere because those are the techniques you know.

And the next stage that a lot of people go through, is that you learn more techniques, and more complex ways of solving problems, and then you get excited about them and start to apply them everywhere possible. So instead of using the simplest possible solution, you end up creating something that’s probably overly complex or uses some overly general formulation.

And then eventually you get past that and it’s about understanding, “what are the techniques at my disposal? Which techniques fit this problem most naturally? How can I express myself as clearly as possible, so I can understand what I am doing, and so other people can understand what I am doing?” I talk about this a lot but think explicitly about code as communication. You are obviously telling the computer what to do, but ideally you want to write code to express what it means or what it is trying to do as well, so when others read it and when you in the future reads it, you can understand some of the reasoning.

~ Hadley Wickham Advice to Young and Old R Programmers

*** { @unit = “”, @title = “Unit Overview”, @foldout }

Description

This section introduces the Core R graphics engine.

Learning Objectives

Once you have completed this section you will be able to:

- Use the plot() function

- Build custom graphics with base graphing commands:

- points()

- lines(), abline()

- text()

- axis()

Assigned Reading

Required:

Please skim these chapters before starting your lab. Sample code has been provided for each lab question, but you may need the chapters and the R help files to find specific arguments.

The plot() Function

Building Custom Graphics

Suggested:

Help with R graphics:

Inspiration:

Lab

Lab-03 introduces the primary plotting functions used to build graphics.

- plot()

- points()

- lines(), abline()

- text()

- axis()

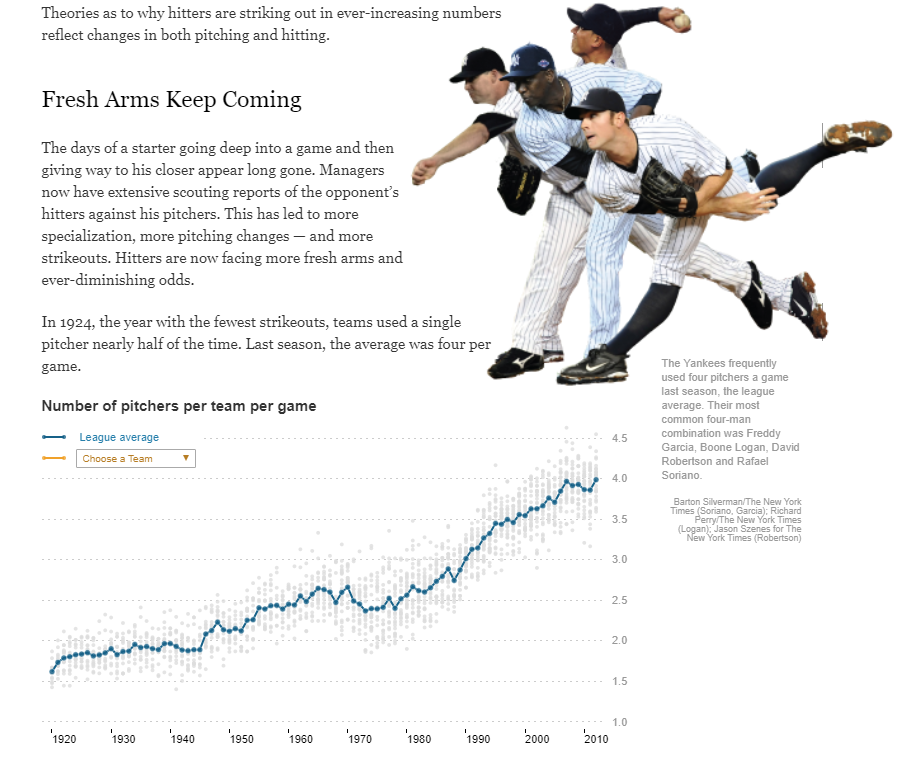

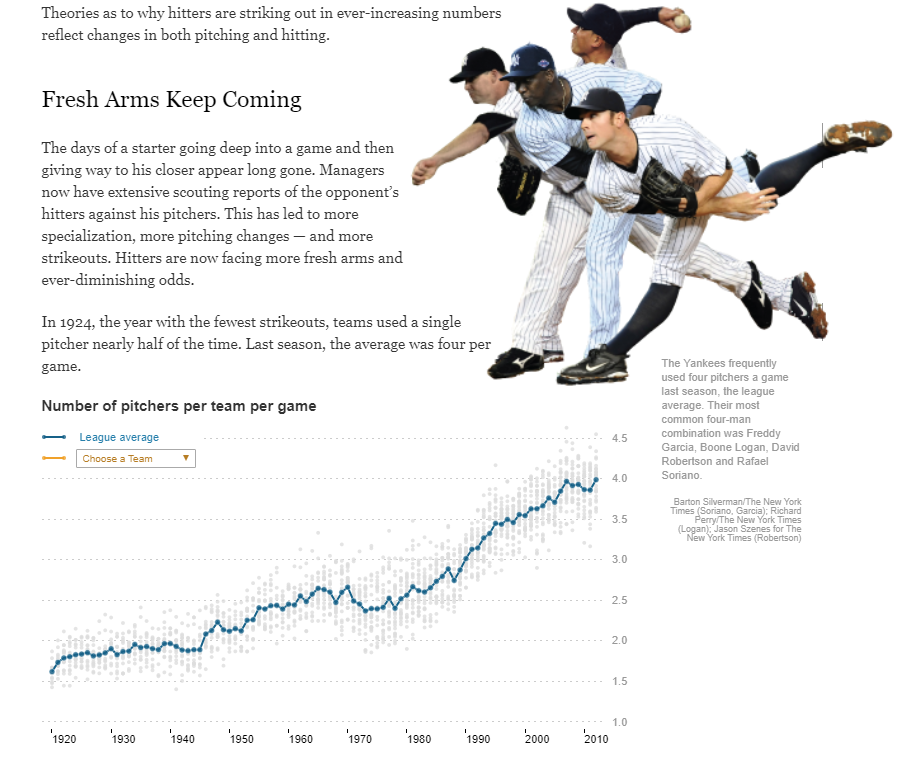

The lab requires you to re-create a graph that was featured in the New York Times:

*** { @unit = “MON Sept 9th”, @title = “Discussion Topic: Data Viz Packages”, @assignment, @foldout }

R Graphics Packages

This week you will begin working in the core R graphics engine. This discussion topic offers an opportunity to explore some of the myriad graphics packages in R.

Your task is to select a specialized graphic that you could use in your own (hypothetical) research or professional life, then describe what data or topic from your own work the visualization would be useful for. Reference the R package you would need for the task.

For example, I might say that I work creating budgets for a government organization. I could use a Sankey Diagram from the D3 Package to visualize our budget.

You will find sites like the R Graphs Gallery and The Data Viz Project helpful.

*** { @unit = “SUN Sept 15th”, @title = “Lab 03”, @assignment, @foldout }

Lab-03 - Graphics

This lab is designed to introduce you to core graphing functions in R by replicating a New York Times graphic.

You are advised to skim the chapters on graphing functions and custom graphics in R, but sample code has been provided for each step of the lab.

The plot() Function

Building Custom Graphics

Submit Solutions to Canvas:

** Week 4 - Dynamic Visualization

*** { @unit = “”, @title = “Unit Overview”, @foldout }

Description

This section introduces the use of R Shiny widgets to make graphs dynamic.

Learning Objectives

Dynamic graphics allow a user to select parameters that change the visualization in some way. Graphics will update in real-time within a web browser.

By the end of this unit you will be able to:

- Construct widgets to allow users to select inputs.

- Convert static graphics to dynamic graphics using the Shiny package.

Assigned Reading

Read the notes on using R Shiny widgets and render functions to accept user input (widgets), and change graphics in response (render).

Lab

Lab 04 will again use the graph that was featured in the New York Times:

Try the interactive graphic at the NYT.

But we will now add an input widget that allows users to select one team that will be highlighted on the graph in yellow.

*** { @unit = “MON Sept 16th”, @title = “Discussion Topic”, @assignment, @foldout }

Bad Graphs

There is a lot of science behind data visualization, but the art to storytelling with data can be hard to distill into a few basic principles. As a result, it takes time to learn how to do it well. The best way to develop data visualization skill is to regularly consume interesting graphics. David McCandless is one of the best ambassadors for the field of graphic design and visualization. Check out his TED Talk, and some excerpts from his book Information is Beautiful.

Unfortunatly, it is much easier to create tragically bad graphics than it is to create good graphics. For your blog this week, read the Calling Bullshit overview on proportional ink and misleading axes to develop some sensitivity about misleading graphics.

Find a graph that violates one of these principles, or commits an equally egregious visualization crime. Share the graph and explain what offense has been committed. You might start by searching for “bad graphs” on google images.

This use of clowns in bar charts is one of my favorites. You might also enjoy pizza charts or these gems.

*** { @unit = “”, @title = “Demo of Shiny Widgets”, @foldout }

For more widget examples visit the R Shiny Widget Gallery and the R Shiny Gallery.

*** { @unit = “SUN Sept 22nd”, @title = “Lab 04”, @assignment, @foldout }

Lab-04 - Dynamic Graphics

This lab is designed to introduce you to R Shiny functions by adding a dynamic element to the NYT graphic from last week.

Submit Solutions to Canvas:

** Week 5 - Data Wrangling

*** { @unit = “”, @title = “Unit Overview”, @foldout }

Description

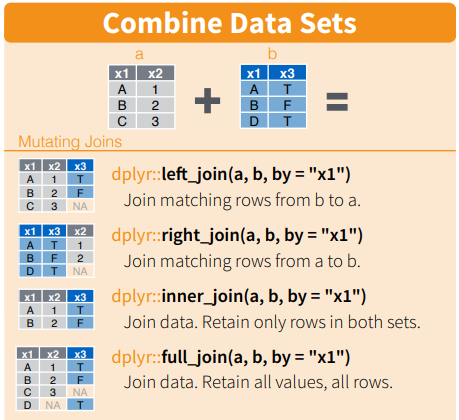

This unit focuses on the important task of “data wrangling”, various manipulations that allow you to quickly filter, join, sort, transform, and describe your data. The dplyr package and tidyverse tools are some of the most popular in R.

Learning Objectives

By the end of this unit you will be able to:

- Subset data by rows or columns

- Create multi-dimensional summary tables by grouping data

- Generate new variables through transformations of existing variables

- Write efficient “data recipes” using pipe operators

Assigned Reading

Read the notes on data wrangling in R:

You may also find the Data Wrangling Cheatsheet useful.

Lab

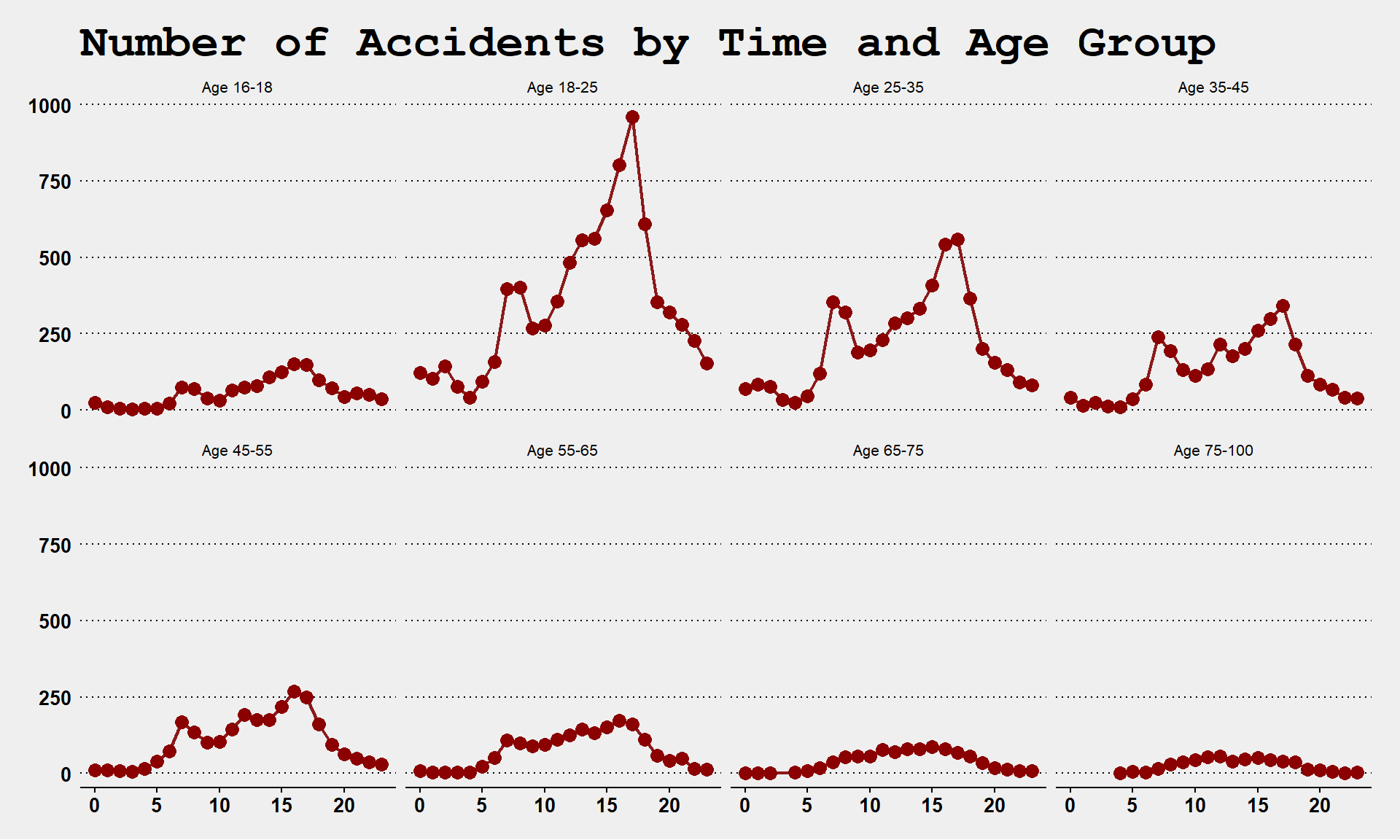

Lab 05 will use data on traffic accidents in the City of Tempe:

*** { @unit = “TUES Sept 24th”, @title = “Discussion Topic Data APIs”, @assignment, @foldout }

Data APIs

Part of the reason data science has grown so much as a field in recent years is because of advances in computing technologies that allows us to run powerful programs and to work with large datasets on personal computers. But just as important, data has become so ubiquitous, cheap, and valuable for organizations.

Your skill level in data science can be measured by how quickly you can take a real-world problem and produce analysis that offers better solutions than the status quo. Analyzing the data is important, but the process of obtaining data is not a trivial step. Having knowledge about where to look for data, or how to augment your existing data, will help you be more effective as an analyst.

Next week we will discuss some ways to get data into R. You can always download data from a website in its current format (CSV, SPSS, or Stata, etc.) then import it into R. Alternatively, it is typically more efficient to use an API.

API stands for “Application Programming Interface”, which is computer science jargon for the protocols that allow two applications to speak to each other. If you are using your mobile phone and you want to log into your bank using an app, an API will send your user credential and password to your bank, and will return information about your balances and transactions. In other words, APIs are structured ways of sending requests back and fourth between systems. The provide permission for external users to query some parts of internal databases (e.g. what is my checking account balance?), and control what information will be returned.

In some cases, organizations that host public datasets have created data APIs to make it easier to request and share the data. To see some examples visit the Data Science Toolkit website, and test out some APIs. In some cases you give some search parameters (such as a zip code), and it returns a new dataset (census data). In other cases, you send data (raw text), and the API sends you a processed version of the data (a sentiment score based upon words in the text). Thus APIs can be used both to access new data sources, as well as to clean or process your current data as part of your project.

The R community has made a lot of APIs easier to use by creating packages that allow you to access data directly in R using custom functions. For example, the Twitter package allows you to request tweets from specific dates and users, and sends back a dataset of all tweets that meet your criteria (with limits on how much you can access at a time).

R packages translate the API into functions that will translate your request into the correct API format, send the request, return the data directly into R, and often convert it into an easy to use format like a data frame. In this way, you can quickly access thousands of datasets in real time through R, and you can also store your requests in scripts for future use.

For the discussion topic this week find an example of an API that could be useful for your work. For example, I use a lot of federal data. I was excited to learn that the website Data USA has created a public API that allows users to access over a dozen federal datasets:

You can find APIs through a Google search, or browse datasets on the DS4PS Open Data page. Altnernatively, you can report on a package in R that uses a data API and describe what kinds of data the package allows you to access.

Note, you do not have to show how to use the API for the post, just identify what information is accessible and how it might be used.

*** { @unit = “SUN Sept 29th”, @title = “Lab 05”, @assignment, @foldout }

Lab-05 - Data Wrangling

This lab offers practice analyzing traffic accident patterns using dplyr data wrangling functions.

Note, this data will be used for your final dashboard. This assignment is a preview of the types of analysis and graphics you might report on the dashboard.

Submit Solutions to Canvas:

** Week 6 - Data IO and Joins

*** { @unit = “”, @title = “Unit Overview”, @foldout }

Description

This week has you continue practicing “data wrangling”. This week will add the step of joining multiple datasets prior to analysis. We will continue to use the dplyr package.

Learning Objectives

By the end of this unit you will be able to:

- Merge two related datasets using join functions

- Identify appropriate keys for joins

- Determine whether you need an inner, outer, or full join

Assigned Reading

Read the notes on data joins:

For reference:

Lab

Lab 06 will use Lahman data on baseball for some moneyball examples.

We will join the Salaries table to player bios (Master table) and performance data (Batting and Fielding) to assess which characteristics predict salary and which teams have been able to most efficiently convert salary to wins.

*** { @unit = “MON Sept 30th”, @title = “Discussion Topic”, @assignment, @foldout }

GitHub for Government

Hear me out. The government is just one big open-source project.

Except currently the source code is only edited irregularly by one giant team, and they debate every single change, and then vote on it.

The code is now millions of lines long, and most of it doesn’t do what it was originally designed for, but it is too exhausting to make changes so they just leave it. There are lots of bugs, and many features do not work.

Despite the flaws, the code somehow still functions (albeit very slowly now, like really slow), and the fan is making funny noises, and sometimes we get a blue screen during the budget process and it shuts down for a few weeks. But when it is re-started, it still kinda works.

It’s an imperfect metaphor, but many people have theorized that government can learn a lot from how open source projects are managed (or governed if we are being precise).

There’s been some uptake of these ideas:

http://open.innovatesf.com/openlaw/

GitHub’s official Government Evangelist

Ben Balter wants to get all up in the U.S. government’s code, and he thinks you should be able to as well. Balter, a Washington, D.C.-based lawyer, is GitHub’s official Government Evangelist. His purpose: to educate government agencies about adopting open-source software.

My favorite Ben Balter project was something simple. GIS files are notoriously large and hard to work with (for some reason GIS shapefiles still split data into five separate files that you have to keep together for them to work properly).

The open source community has created some better data structures that are more efficient and easier to share (geoJSON files), but the geographers that work for cities were all trained on ArcGIS products so it’s all they know! Ben wrote a script that downloaded all of Washington DC’s open data files, converted them to better formats, then uploaded them to GitHub so others have access.

https://github.com/benbalter/dc-maps

It might seem trivial - but geoJSON files can be read into R directly from GitHub, making it easy to deploy the data for a wide variety of purposes:

library( geojsonio )

library( sp )

github <- "https://raw.githubusercontent.com/benbalter/dc-maps/master/maps/2006-traffic-volume.geojson"

traffic <- geojson_read( x=github, method="local", what="sp" )

plot( traffic, col="steelblue" )

traffic data from dc

For this week, read about how GitHub has evolved to support government.

Do you think open source frameworks would help open the black box and make government more accessible? Would government become more accessible, or alienate regular citizens that are not computer scientists? Would it make influence from special interests more transparent, or would it make it easier for them to hijack the process of shaping local laws?

*** { @unit = “FRI Oct 4th”, @title = “Lab 06”, @assignment, @foldout }

Lab-06 - Data Joins

This lab is designed to introduce you to primary data join functions in R.

Submit Solutions to Canvas:

** Week 7 - Dashboards

*** { @unit = “”, @title = “Reflection”, @foldout }

“With very few exceptions, there is no shortcut between not knowing something and knowing it. There is a beauty to awkwardness, a wisdom in the wobble.”

~Maya Stein

*** { @unit = “FRI Oct 11th”, @title = “Code-Through Assignment”, @assignment, @foldout }

Code-Through

Since you are sharing your code-through with your classmates on Yellowdig, it will serve as your discussion topic this week.

If you send me your RMD and HTML files via email, I will post them to GitHub and create a link for you if you would like to share a URL instead of a file.

Submit to Canvas:

Post on Yellowdig

*** { @unit = “FRI Oct 11th”, @title = “FINAL PROJECT”, @assignment, @foldout }

Create a Data Dashboard in R

Working with the crash data from Lab-05, you will extend the work you began in Lab-04 by creating a dynamic data dashboard that will be used to search for patterns in Tempe crash data.

Submit to Canvas:

Please note that I will be traveling on Friday Oct 11th, so response to questions will be slow if you wait until Friday.