Fixed effects

Packages

Here are the packages we will use in this lecture. If you don’t remember how to install them, you can have a look at the code.

- plm for fixed effect model

- car and gplots for graphs

- stargazer for nice tables

install.packages( "plm" )

install.packages( "stargazer" )

install.packages( "scales" )

install.packages( "car" )

install.packages( "gplots" )

install.packages( "fastDummies" )What you will learn

- What is the difference between an OLS, fixed, and random effect model?

- How do you interpret the effect of X on Y in a fixed effect model?

- Which data structure do you need to run a fixed effect model?

- How to run a fixed effect model in R

1 The key concept

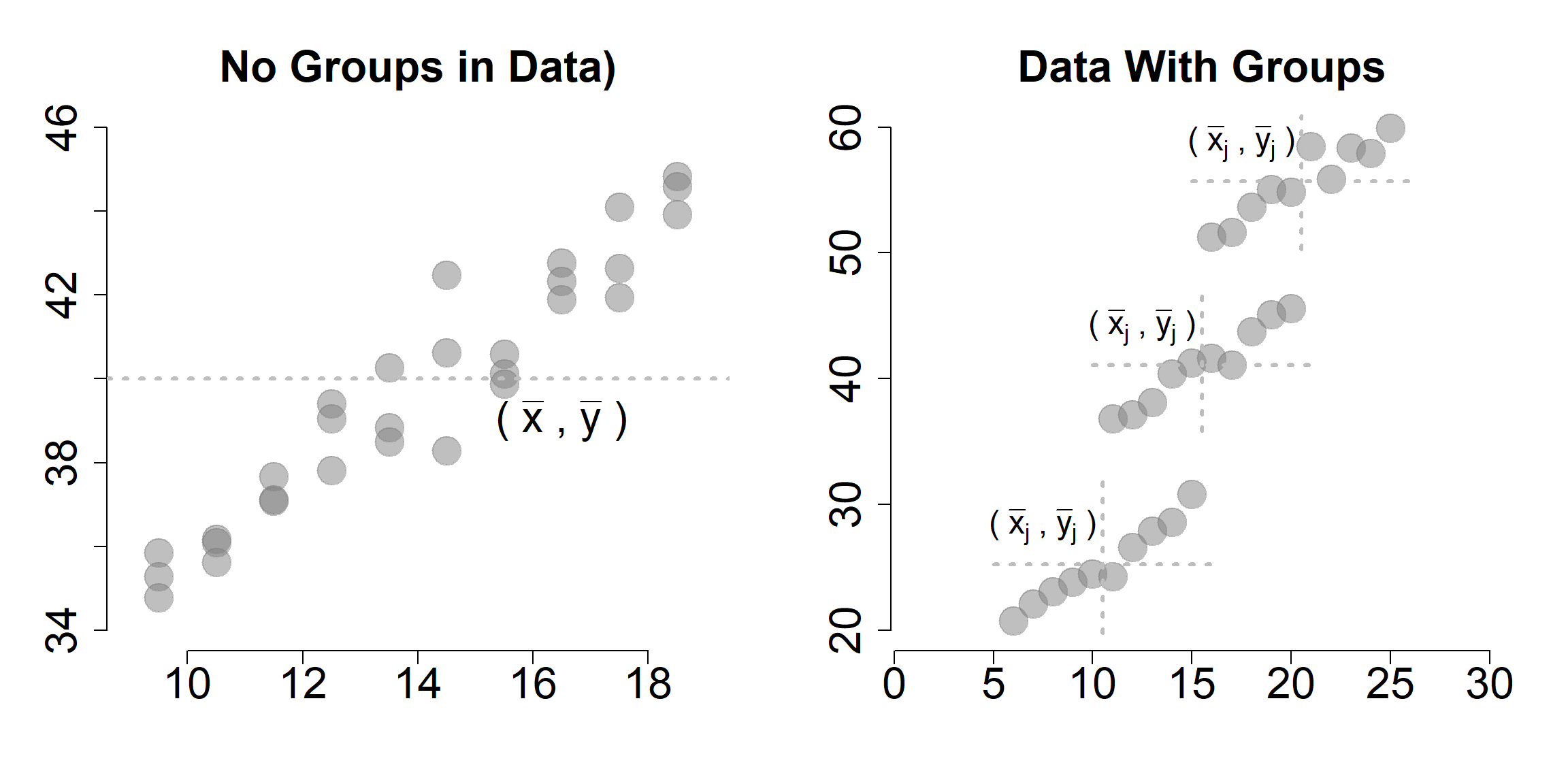

We will start with a very simple example to illustrate the idea of within and between variation, which is the key to understanding the fixed effect model.

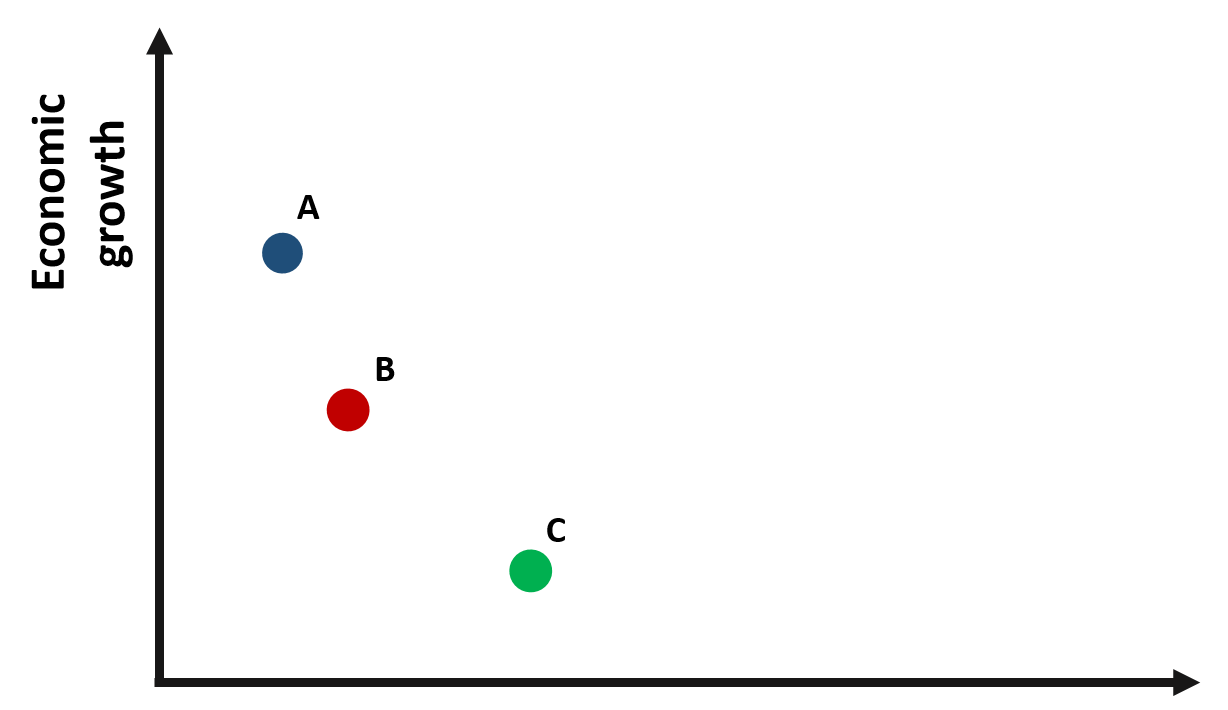

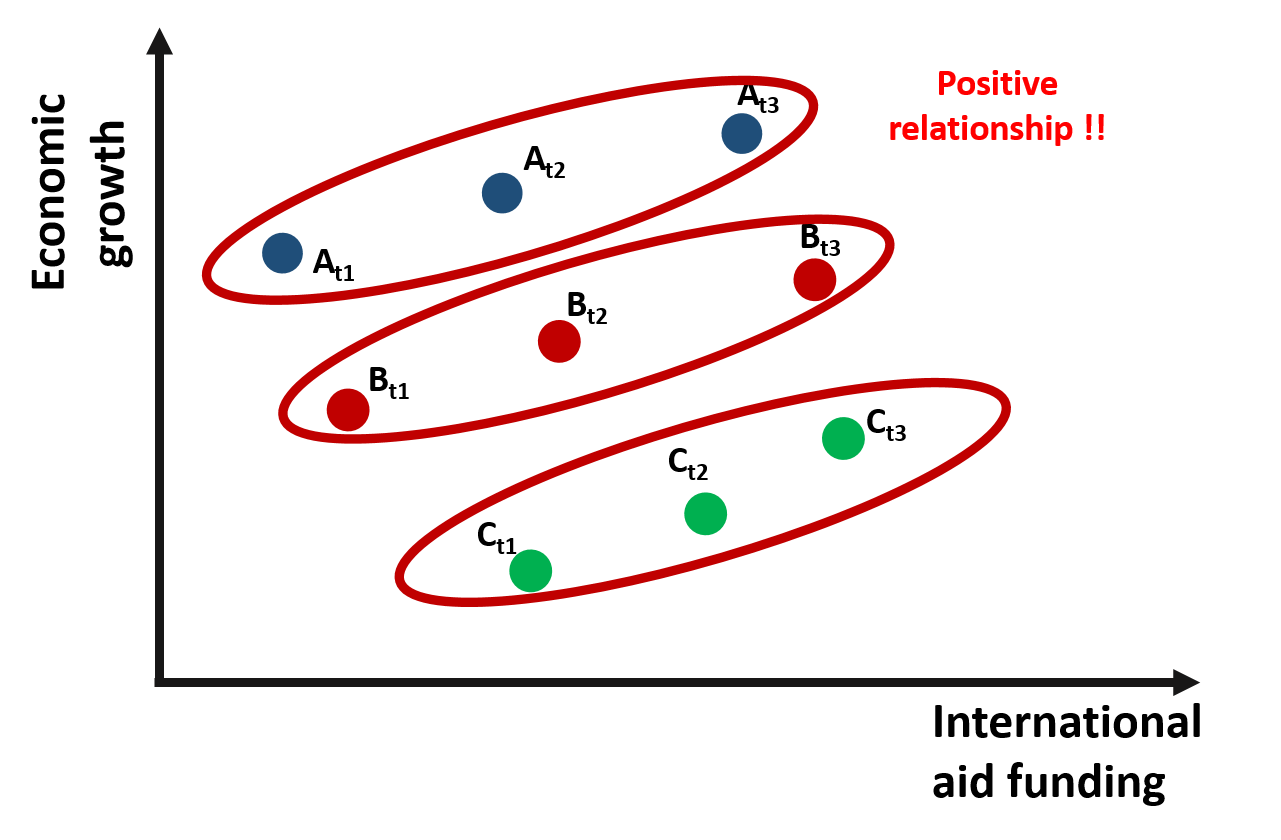

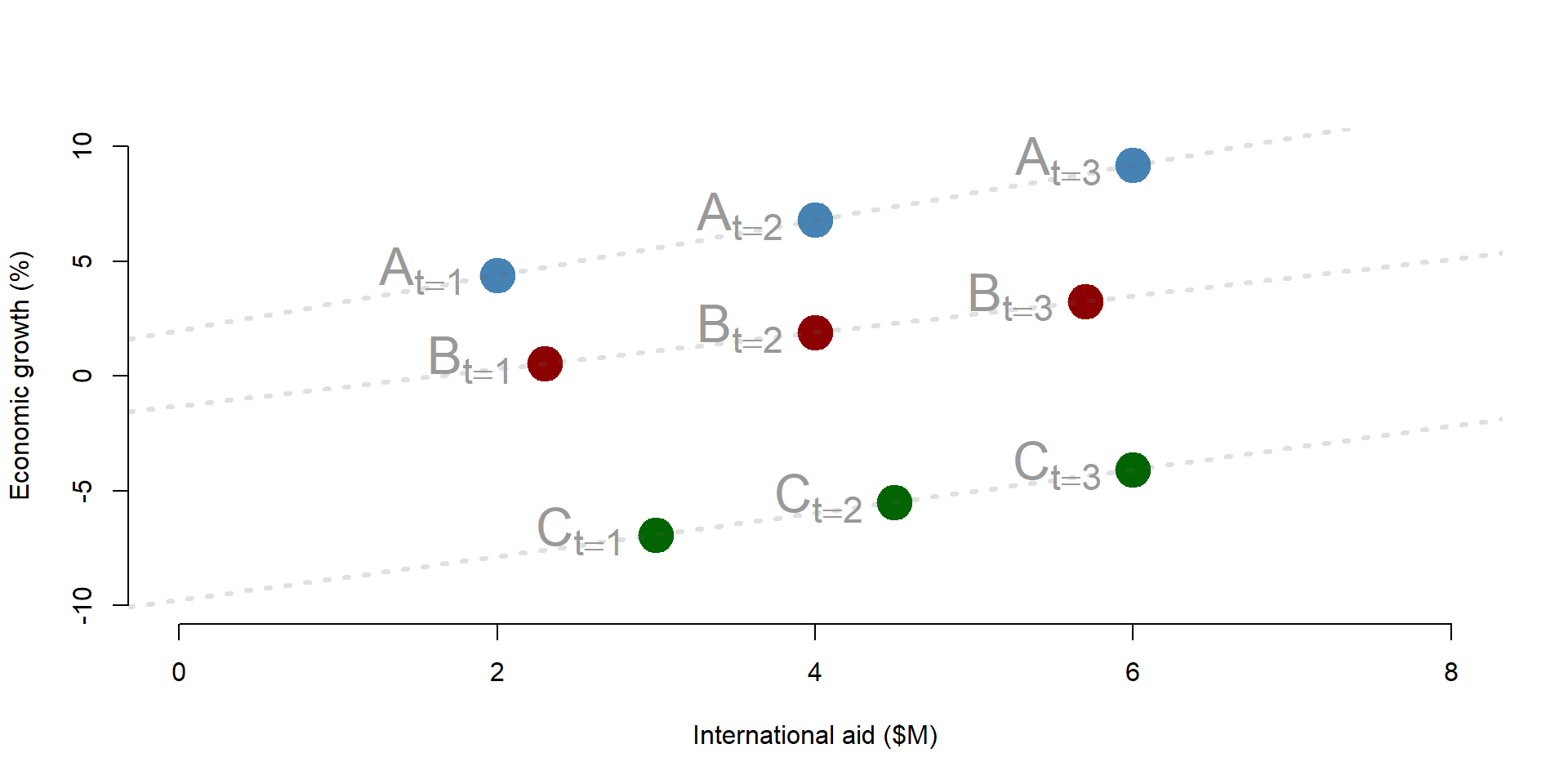

Let’s imagine to have data about three different countries. We want to understand the relationship between international aid funding received by the country (policy variable) and economic growth (outcome variable).

Figure 1.1: Relationship between economic growth and international aid

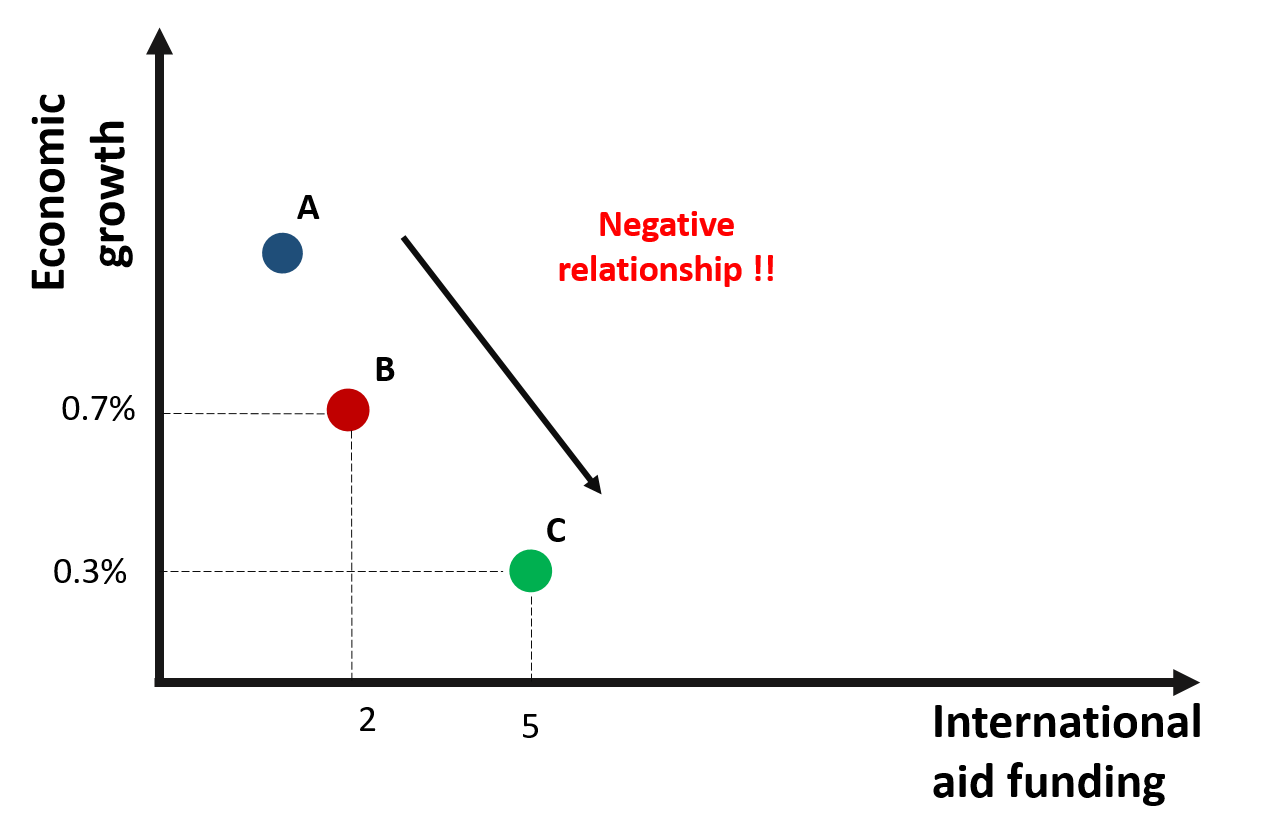

Based on the graph, we would expect a negative relationship between x and y: countries receiving greater international aid report a lower growth. For instance, country C receives more funding than country B but its growth it’s less than half of country B.

Figure 1.2: A negative relationship between growth and international aid?

Are we supposed to conclude that international aid has a negative effect on growth?

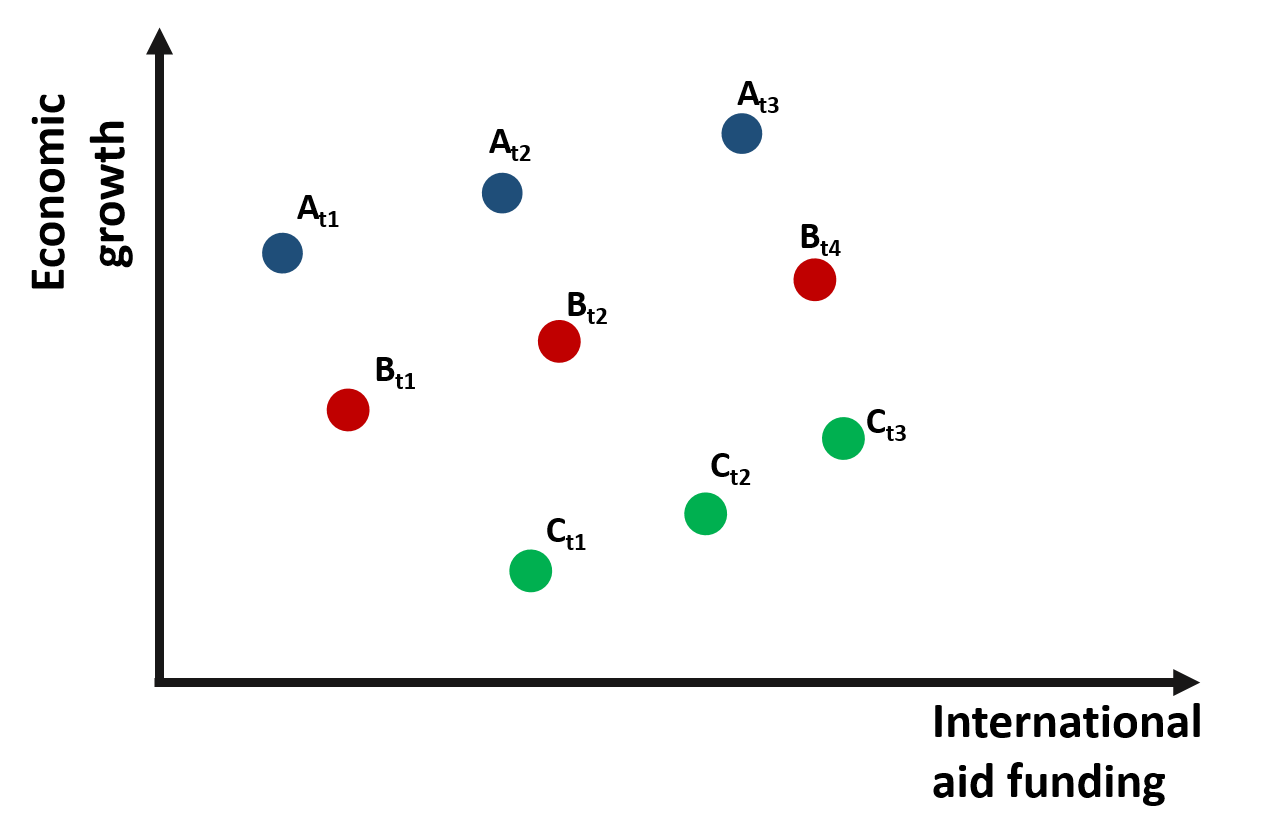

A way to confirm this result is to collect data about each country over an extended period of time. In this way, we move from cross-sectional data to panel data, where each variable in the datset is observed along a given time period.

The small “t” next to each observation indicates the time at which data were collected.

The graph 1.3 represents observations from three countries across three time periods.

Figure 1.3: Relationship between growth and international aid: panel data

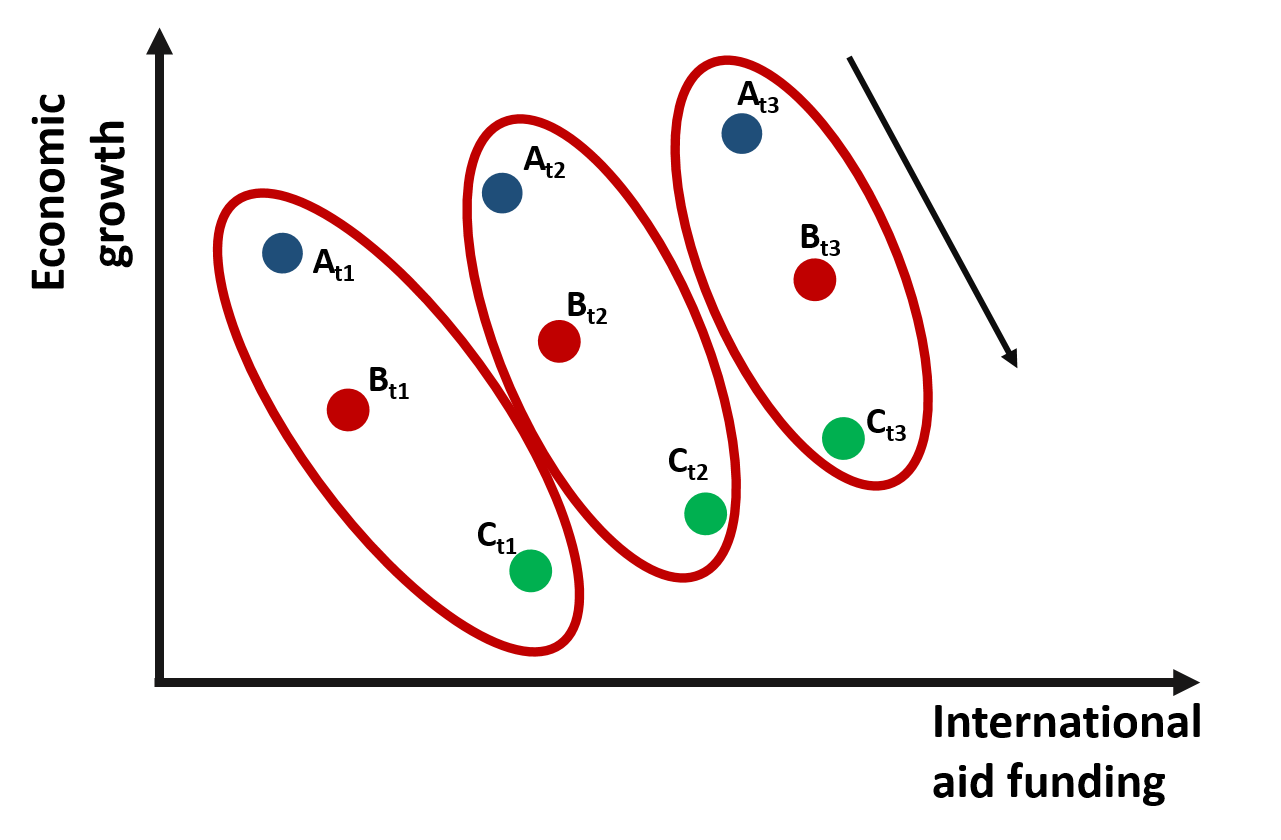

If we consider the relationship between international aid and growth within each time period and across the 3 countries, the relationship is once again negative.

Figure 1.4: Relationship between growth and international aid: Across countries variation

However, if we change the way we look at the data and consider the relationship between international aid and growth within one single country and across time, we would observe a positive relationship.

Figure 1.5: Relationship between growth and international aid: Within countries variation

Why does the relationship change so much?

One possible explanation is that country C is poorer and, because of that, it receives more funding than the other countries. However, when we compare country C against other countries, its growth is still limited. As results, across countries variation is negative. The trend is positive only if we compare growth over time within each country. As country C receives more funding, its economic growth improves.

This statistical phenomenon is known as the Simpson’s Paradox, where trends are different depeding on whether data are observed by groups or at the aggregate level (you can read more about the Simpson’s paradox here and here).

As we will see in the remain of the lecture, fixed effect wil enable us to consider differences across groups so that we can estimated the within effect of the policy intervention.

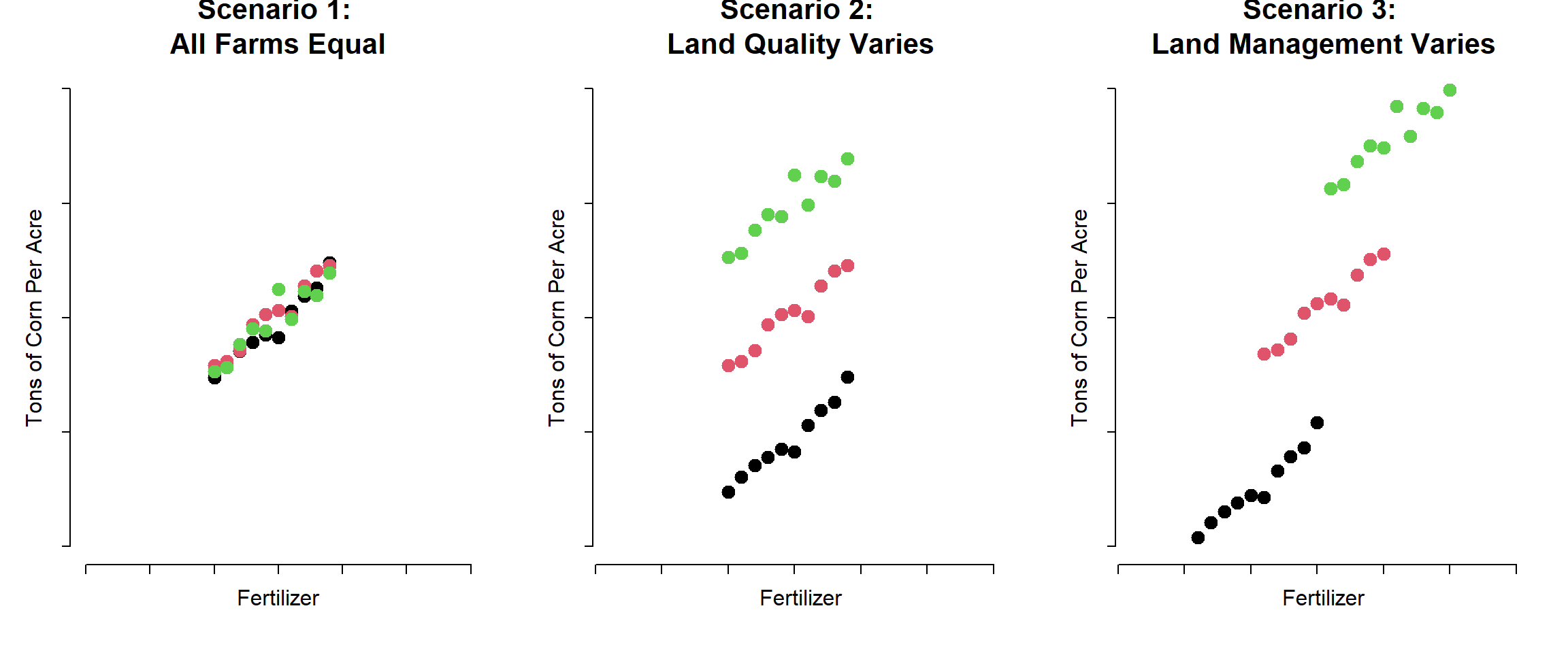

Mundlak (1965) presented one of the first examples of solvig “management bias” in estimations using fixed effects.

# demo datasets farms:

dat.ols <-

structure(list(corn = c(34.7827346624912, 36.0996074731855, 37.0800275276849,

37.8215116009867, 38.5035794734048, 38.2831528023024, 40.5949079497628,

41.8842896575634, 42.6289072975422, 44.8224159482085, 35.8513545057719,

36.1712274222178, 37.1238755672832, 39.4036829336085, 40.2581671356454,

40.6281033803834, 40.1122944621368, 42.7577507798798, 44.0876312272813,

44.5755763696388, 35.2870931624421, 35.6245712674313, 37.6634175421797,

39.0413450336074, 38.8352805278445, 42.4753832533224, 39.8812959780677,

42.3251713544568, 41.92766784451, 43.9103522153667), fertilizer = c(10,

11, 12, 13, 14, 15, 16, 17, 18, 19, 10, 11, 12, 13, 14, 15, 16,

17, 18, 19, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19), org.id = structure(c(1L,

1L, 1L, 1L, 1L, 1L, 1L, 1L, 1L, 1L, 2L, 2L, 2L, 2L, 2L, 2L, 2L,

2L, 2L, 2L, 3L, 3L, 3L, 3L, 3L, 3L, 3L, 3L, 3L, 3L), .Label = c("farm-1",

"farm-2", "farm-3"), class = "factor")), class = "data.frame", row.names = c(NA,

-30L))

dat.re <-

structure(list(corn = c(24.7827346624912, 26.0996074731855, 27.0800275276849,

27.8215116009867, 28.5035794734048, 28.2831528023024, 30.5949079497628,

31.8842896575634, 32.6289072975422, 34.8224159482085, 35.8513545057719,

36.1712274222178, 37.1238755672832, 39.4036829336085, 40.2581671356454,

40.6281033803834, 40.1122944621368, 42.7577507798798, 44.0876312272813,

44.5755763696388, 45.2870931624421, 45.6245712674313, 47.6634175421797,

49.0413450336074, 48.8352805278445, 52.4753832533224, 49.8812959780677,

52.3251713544568, 51.92766784451, 53.9103522153667), fertilizer = c(10,

11, 12, 13, 14, 15, 16, 17, 18, 19, 10, 11, 12, 13, 14, 15, 16,

17, 18, 19, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19), org.id = structure(c(1L,

1L, 1L, 1L, 1L, 1L, 1L, 1L, 1L, 1L, 2L, 2L, 2L, 2L, 2L, 2L, 2L,

2L, 2L, 2L, 3L, 3L, 3L, 3L, 3L, 3L, 3L, 3L, 3L, 3L), .Label = c("farm-1",

"farm-2", "farm-3"), class = "factor")), class = "data.frame", row.names = c(NA,

-30L))

dat.fe <-

structure(list(corn = c(20.7827346624912, 22.0996074731855, 23.0800275276849,

23.8215116009867, 24.5035794734048, 24.2831528023024, 26.5949079497628,

27.8842896575634, 28.6289072975422, 30.8224159482085, 36.8513545057719,

37.1712274222178, 38.1238755672832, 40.4036829336085, 41.2581671356454,

41.6281033803834, 41.1122944621368, 43.7577507798798, 45.0876312272813,

45.5755763696388, 51.2870931624421, 51.6245712674313, 53.6634175421797,

55.0413450336074, 54.8352805278445, 58.4753832533224, 55.8812959780677,

58.3251713544568, 57.92766784451, 59.9103522153667), fertilizer = c(6,

7, 8, 9, 10, 11, 12, 13, 14, 15, 11, 12, 13, 14, 15, 16, 17,

18, 19, 20, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25), org.id = structure(c(1L,

1L, 1L, 1L, 1L, 1L, 1L, 1L, 1L, 1L, 2L, 2L, 2L, 2L, 2L, 2L, 2L,

2L, 2L, 2L, 3L, 3L, 3L, 3L, 3L, 3L, 3L, 3L, 3L, 3L), .Label = c("farm-1",

"farm-2", "farm-3"), class = "factor")), class = "data.frame", row.names = c(NA,

-30L))

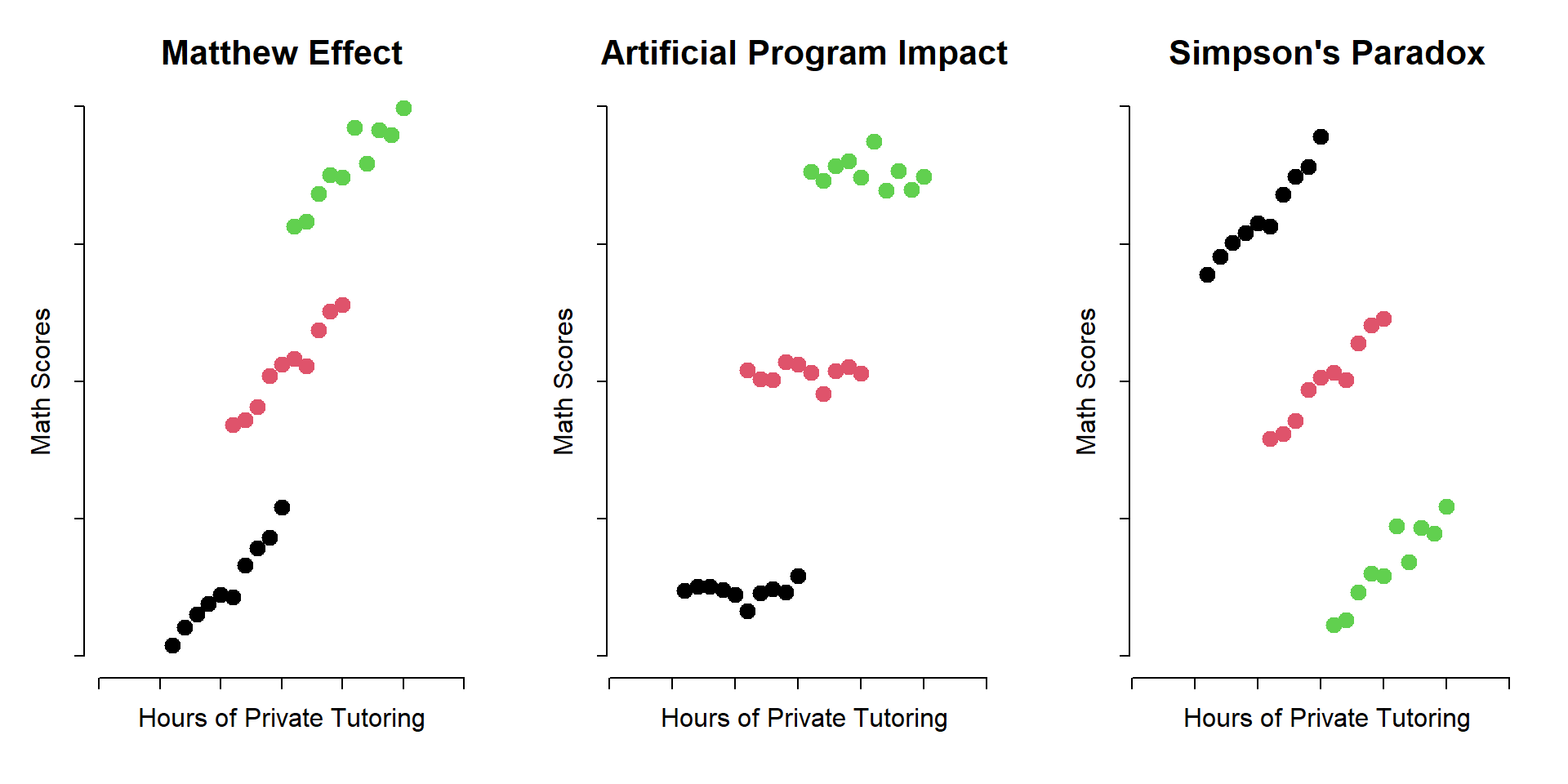

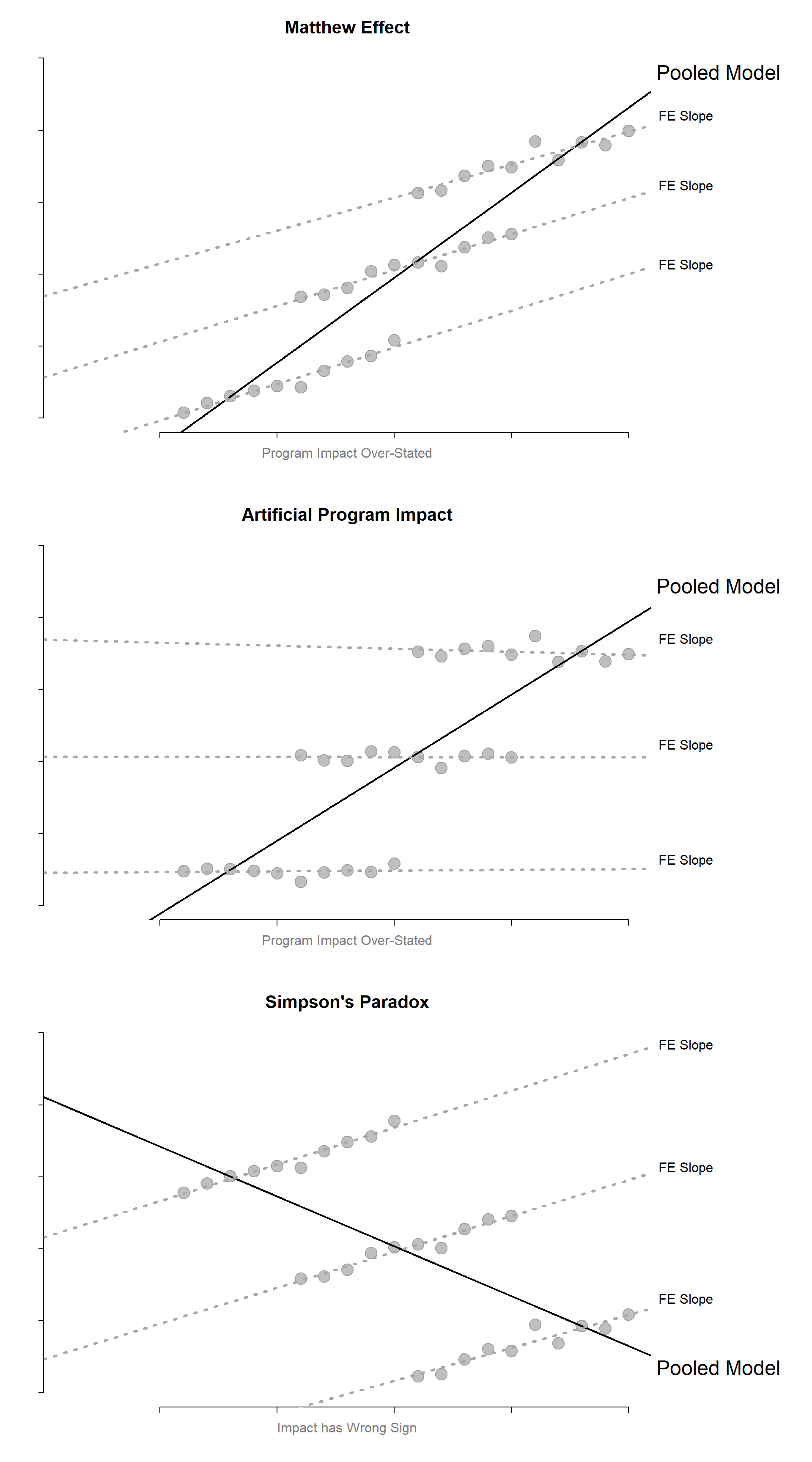

1.1 Types of Bias

1.2 OLS, demeaning, and fixed effects.

We will continue our example and look at some numbers to better understand differences between OLS and fixed effects.

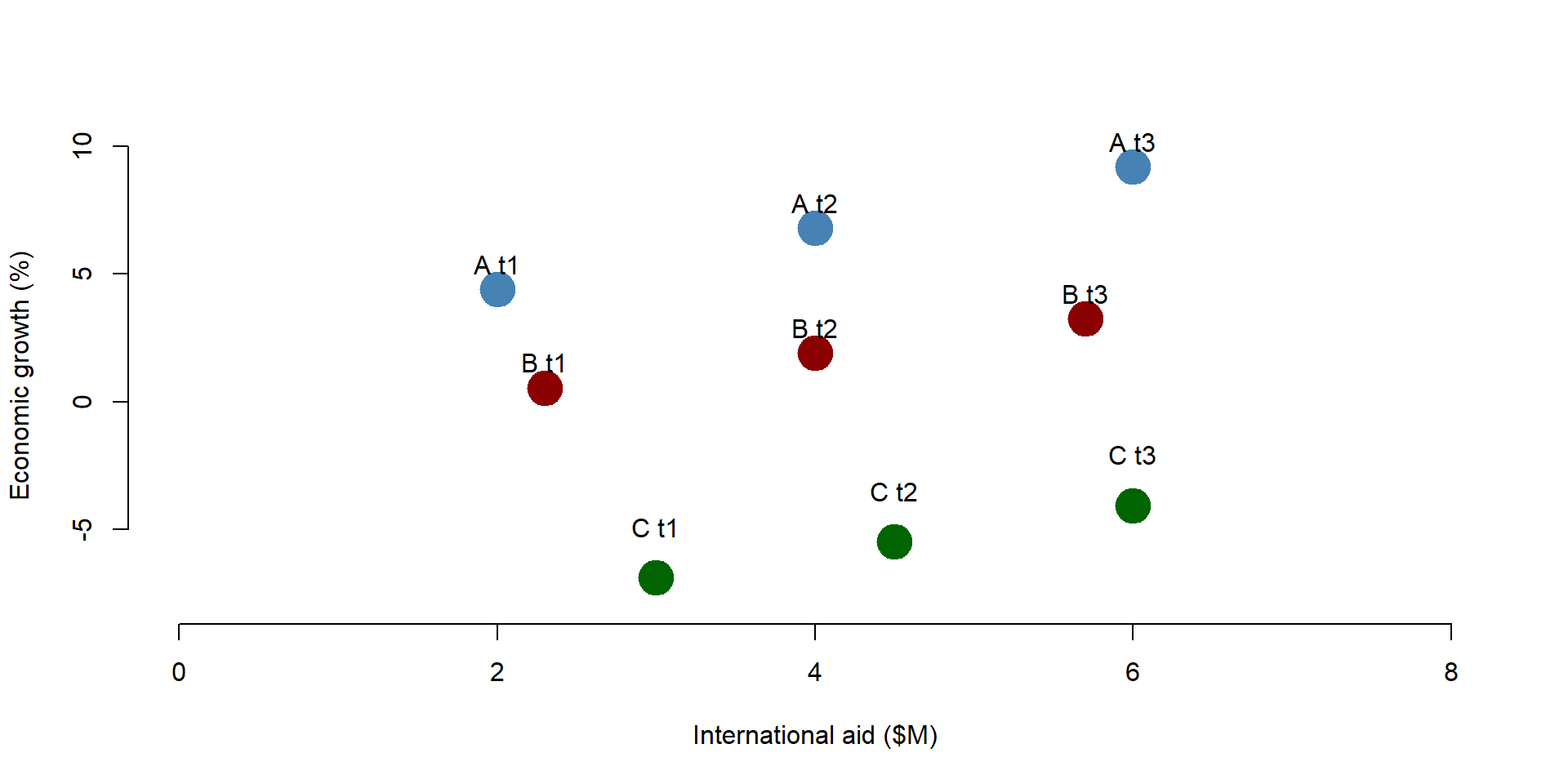

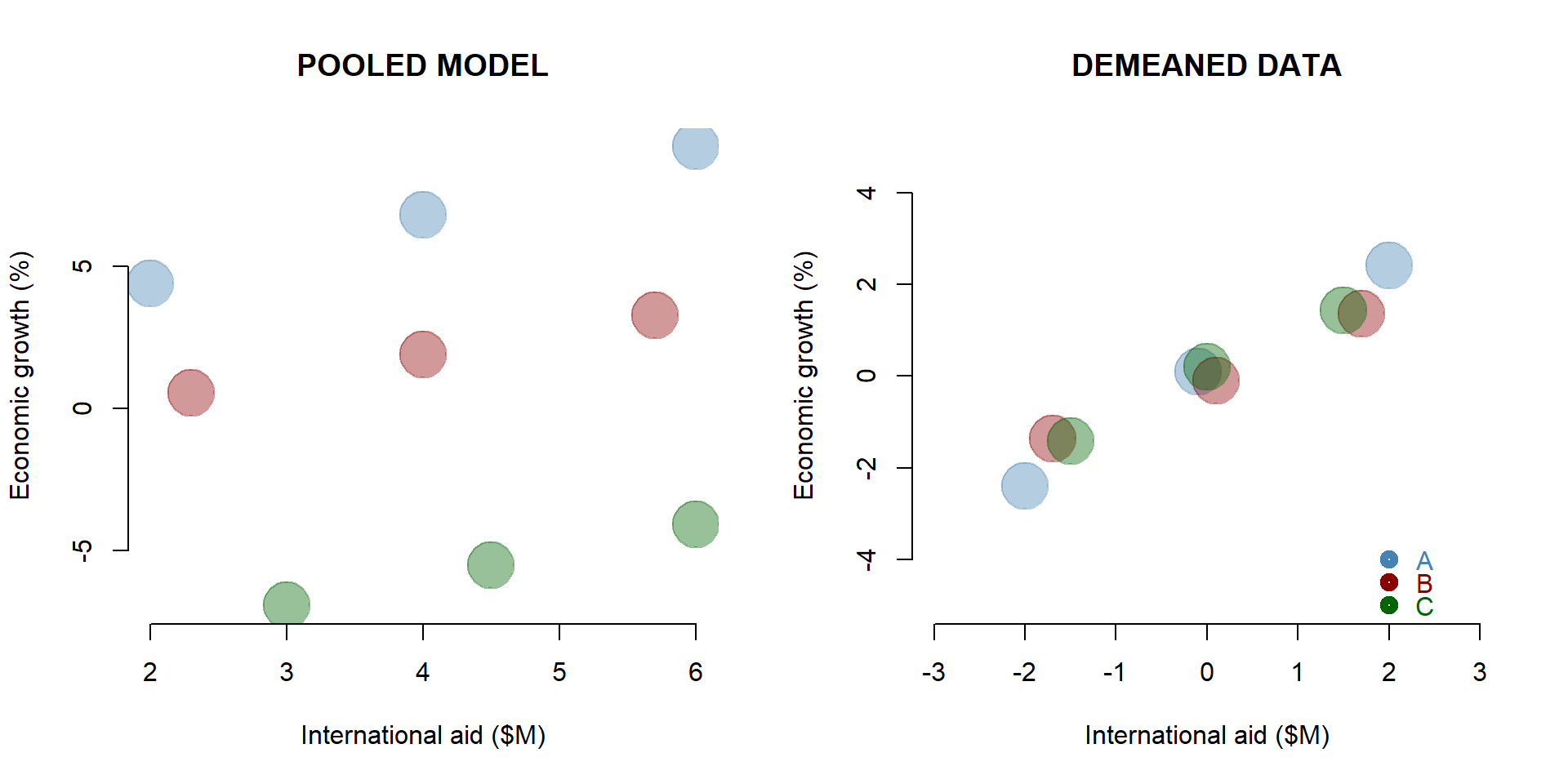

Let’s take a look at a simulated dataset that replicates the example illustrated in figure 1.3. We plot the observations on a graph. We have three countries (A, B, C) and we observe the relationship between international aid and economic growth at three points in time.

# development aid example

data <-

structure(list(x = c(2, 4, 6, 2.3, 4, 5.7, 3, 4.5, 6), y = c(4.4,

6.8, 9.2, 0.54, 1.9, 3.26, -6.92, -5.495, -4.07), g = structure(c(1L,

1L, 1L, 2L, 2L, 2L, 3L, 3L, 3L), .Label = c("a", "b", "c"), class = "factor"),

t = c(1, 2, 3, 1, 2, 3, 1, 2, 3)), class = "data.frame", row.names = c(NA,

-9L))palette( c( "steelblue", "darkred", "darkgreen" ) )

plot( x, y, bty="n", pch=19, cex=3,

ylim = c(-10,10), xlim = c(0,8),

col = as.factor(data$g),

ylab = "Economic growth (%)",

xlab = "International aid ($M)")

id.label <- paste0( toupper( data$g ), "[t==", data$t, "]" )

abline( lm(y~x, subset=(g=="a") ), lwd=3, lty=3,

col=adjustcolor("gray40", alpha=0.2) )

abline( lm(y~x, subset=(g=="b") ), lwd=3, lty=3,

col=adjustcolor("gray40", alpha=0.2) )

abline( lm(y~x, subset=(g=="c") ), lwd=3, lty=3,

col=adjustcolor("gray40", alpha=0.2) )

text(data$x, data$y, parse( text=id.label ),

col="gray60", pos=2, cex=2, offset=1 )

Figure 1.6: Simulated dataset: Economic growth and international aid

1.2.1 OLS model

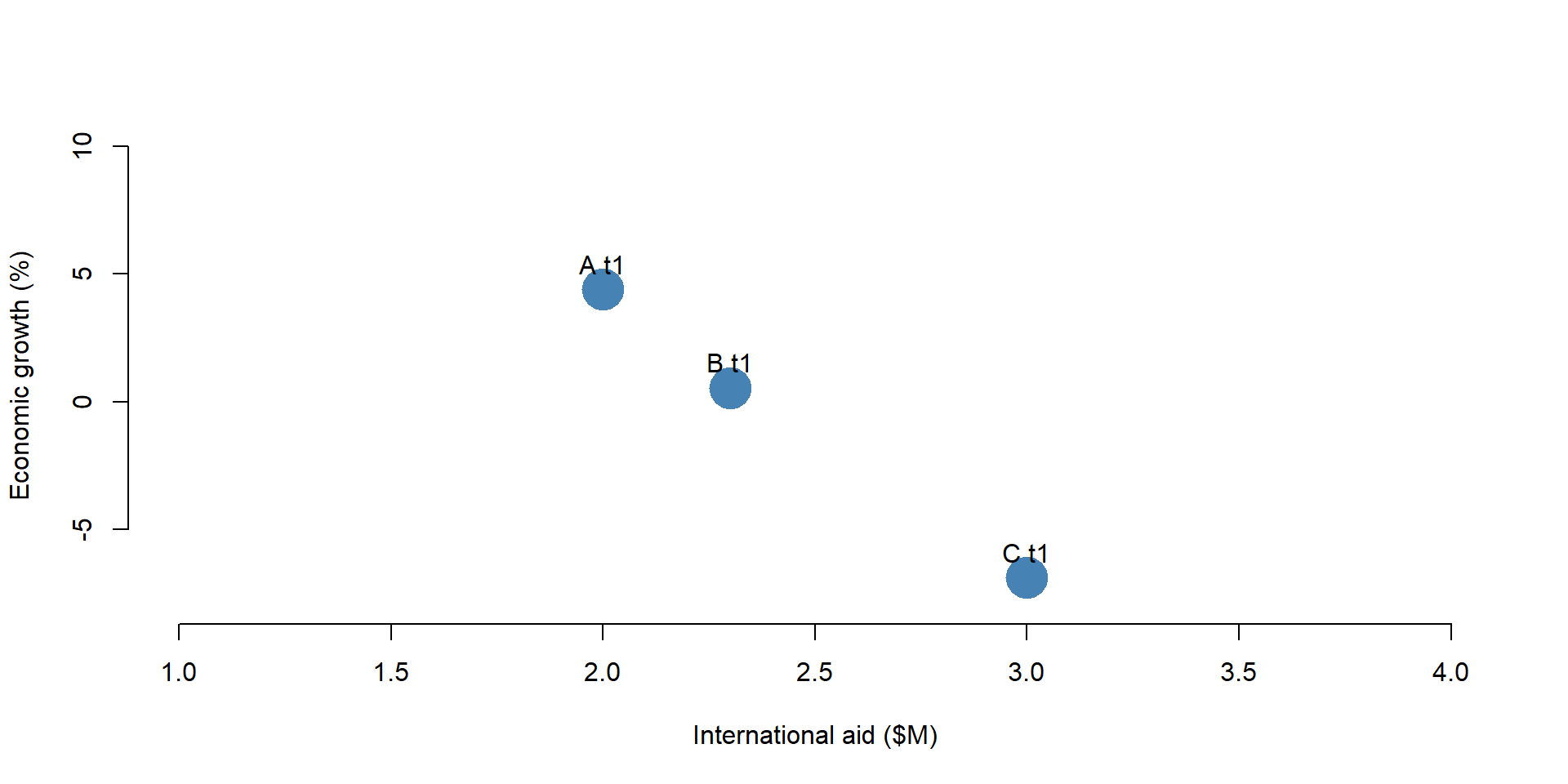

First, we estimate the relationship between x and y considering only one year of data (cross-sectional dataset as in figure 1.2). We can use an OLS model.

The OLS results show that the coefficient of International Aid is significantly and negatively correlated with Economic Growth. It suggests that for each additional milion received in international aid funding, economic growth decreases by 11%.

palette( c( "steelblue", "darkred", "darkgreen" ) )

plot( x[data$t ==1], y[data$t==1], pch=19, cex=3, bty="n",

ylim = c(-8,10), xlim = c(1,4),

col = as.factor(data$g), lwd = 5,

ylab = "Economic growth (%)",

xlab = "International aid ($M)")

abline(y[data$t == 1] ~ x[data$t == 1], col = "red")

text(data$x[1], data$y[1]+1, "A t1")

text(data$x[4], data$y[4]+1, "B t1")

text(data$x[7], data$y[7]+1, "C t1")

Figure 1.7: Simulated dataset: Year = 1

OLS <- lm ( y[data$t == 1] ~ x[data$t == 1] )

stargazer( OLS,

type = "html",

dep.var.labels = ("Economic growth (T1)"),

column.labels = c("Cross-Sectional OLS"),

covariate.labels = c("Intercept", "International aid - Year 1"),

omit.stat = "all",

digits = 2, intercept.bottom = FALSE )| Dependent variable: | |

| Economic growth (T1) | |

| Cross-Sectional OLS | |

| Intercept | 26.60** |

| (1.26) | |

| International aid - Year 1 | -11.20** |

| (0.51) | |

| Note: | p<0.1; p<0.05; p<0.01 |

1.2.2 Pooled OLS model

Since we have data across multiple years, we can also use a pooled OLS regression, where we use all observations across years to predict Economic Growth (as in figure 1.3).

We compare results across a cross-sectional OLS and a pooled OLS model. The pooled OLS regression indicates that international aid has no effect on economic growth; the beta coefficient is not significantly different from zero.

Results are substantially different between the cross-sectional and the pooled OLS model.

palette( c( "steelblue", "darkred", "darkgreen" ) )

plot(x, y, pch=19, cex=3, bty="n",

col = factor(data$g),

ylim = c(-8,10), xlim = c(0,8),

ylab = "Economic growth (%)",

xlab = "International aid ($M)")

text(data$x[1], data$y[1]+1, "A t1")

text(data$x[2], data$y[2]+1, "A t2")

text(data$x[3], data$y[3]+1, "A t3")

text(data$x[4], data$y[4]+1, "B t1")

text(data$x[5], data$y[5]+1, "B t2")

text(data$x[6], data$y[6]+1, "B t3")

text(data$x[7], data$y[7]+2, "C t1")

text(data$x[8], data$y[8]+2, "C t2")

text(data$x[9], data$y[9]+2, "C t3")

Figure 1.8: Simulated dataset: All observations

Pooled_OLS = lm ( y ~ x )

stargazer( OLS, Pooled_OLS,

type = "html",

dep.var.labels = ("Economic growth"),

column.labels = c("Cross-Sectional OLS", "Pooled OLS"),

covariate.labels = c("Intercept", "International aid - Year 1", "International aid"),

omit.stat = "all",

digits = 2, intercept.bottom = FALSE )| Dependent variable: | ||

| Economic growth | y | |

| Cross-Sectional OLS | Pooled OLS | |

| (1) | (2) | |

| Intercept | 26.60** | -0.85 |

| (1.26) | (6.02) | |

| International aid - Year 1 | -11.20** | |

| (0.51) | ||

| International aid | 0.46 | |

| (1.37) | ||

| Note: | p<0.1; p<0.05; p<0.01 | |

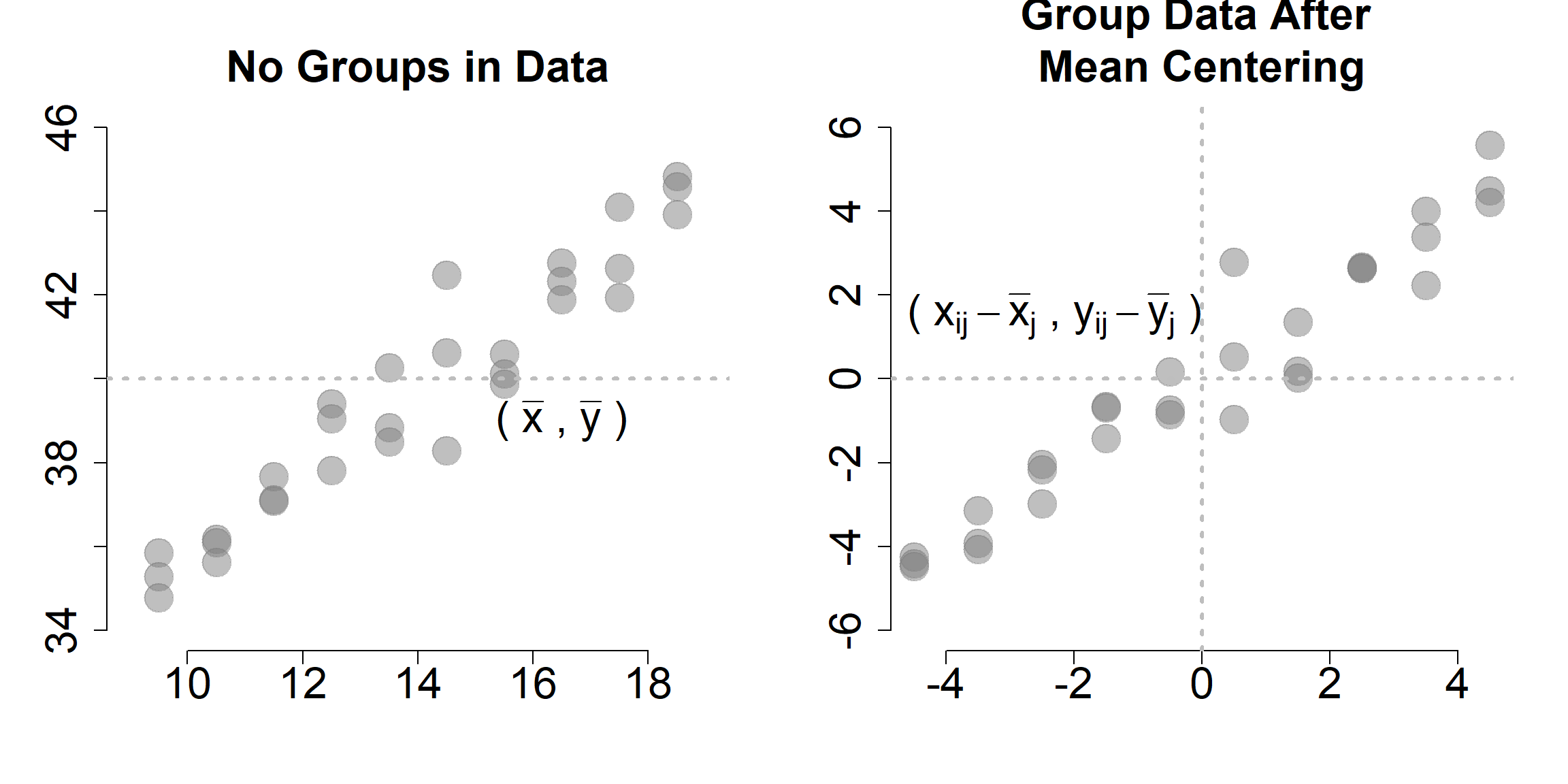

1.2.3 De-meaned OLS model

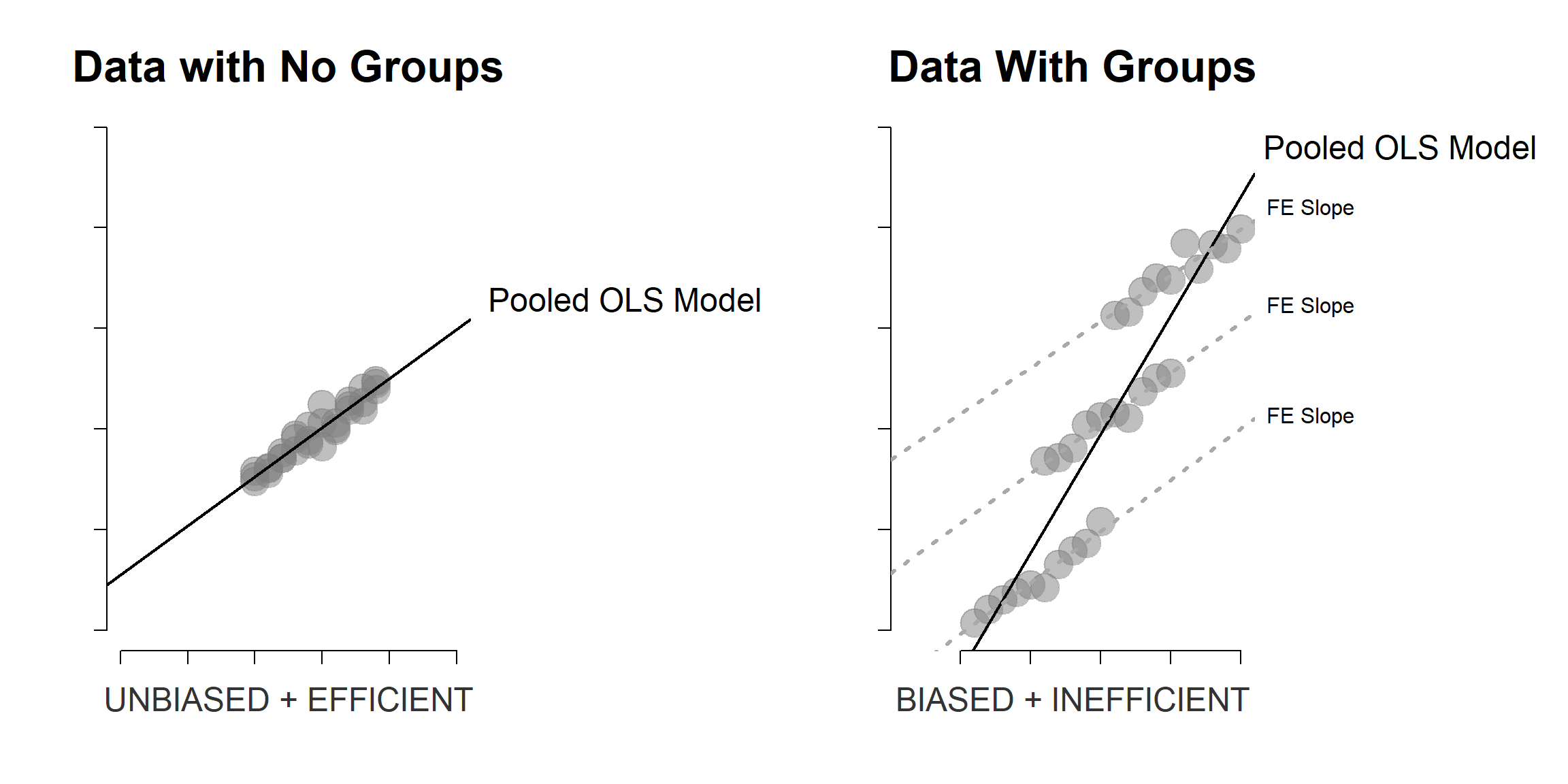

De-meaning the data by groups transforms it into something that looks like regular regression data.

As we discussed before, OLS can be problematic with panel data because it takes into account across-country variation. The effect of international aid on growth is negative because the overall trend across countries is negative. Countries which receive more funding (like Country C) report a lower economic growth. To observe the true effect of international aid, we need to remove the across-country variation. We can do it by demeaning the data.

De-meaning requires you to substract the mean from each variable. In this case, we are interested in demeaning by group, so that the values of x and y are centered around each group mean. You can observe the process in table 1.5.

By demeaning the data, we remove across-country variation: the mean of each country across years is now equal to 0.

The mean value of Economic Growth in group A is 4. We substract 4 from each value of Economic Growth within group A (2, 4, 6) and obtain -2, 0, and 2. We repeat the same process for each group, and for both Economic Growth (x) and International Aid (note: you need to demean all the variables in your model, including control variables).

Figure 1.9: Relationship between growth and international aid: Within countries variation

# library( dplyr )

data <-

data %>%

group_by( g ) %>%

mutate( mean.x=mean(x), x.demeaned = x - mean.x,

mean.y=mean(y), y.demeaned = y - mean.y ) %>%

ungroup()As a result, we obtain a new dataset where our observations are disposed along a line. In graph 1.10, there are no longer significant differences across the 3 countries - they all lay on the same line. We observe only within countries differences.

palette( c( "steelblue", "darkred", "darkgreen" ) )

palette( adjustcolor( palette(), alpha.f = 0.4 ) )

par( mfrow=c(1,2) )

plot( data$x, data$y, bty="n",

# ylim = c(-5,5), xlim = c(-3,3), bty="n",

pch = 19, cex=4, col = factor(data$g),

ylab = "Economic growth (%)",

xlab = "International aid ($M)",

main="POOLED MODEL")

plot( data$x.demeaned, data$y.demeaned,

ylim = c(-5,5), xlim = c(-3,3), bty="n",

pch = 19, cex=4, col = factor(data$g),

ylab = "Economic growth (%)",

xlab = "International aid ($M)",

main="DEMEANED DATA")

points(2, -4, col = "steelblue", lwd = 5 )

points(2, -4.5, col = "darkred", lwd = 5 )

points(2, -5, col = "darkgreen", lwd = 5 )

text(2.4, -4, "A", col = "steelblue")

text(2.4, -4.5, "B", col = "darkred")

text(2.4, -5, "C", col = "darkgreen")

Figure 1.10: Simulated dataset: De-meaned observations

At this point, we can estimate the relationship between x and y using an OLS model. Our beta coefficient is positive and statistically significant. For each milion dollar received in funding aid, a country’s economic growth increases - on average - of 1.01 points percentage.

Note that we do not know whether a country grows more than another. We are estimating an average within country effect.

Demean_OLS = lm ( y.demeaned ~ x.demeaned, data = data )

stargazer( OLS, Pooled_OLS, Demean_OLS,

type = "html",

dep.var.labels = c("Economic growth",

"Economic growth",

"Economic growth (de-meaned)"),

column.labels = c("Cross-Sectional OLS",

"Pooled OLS", "De-meaned OLS"),

covariate.labels = c("Constant",

"International aid - Year 1",

"International aid",

"International aid - De-meaned OLS"),

omit.stat = "all",

digits = 2, intercept.bottom = FALSE )| Dependent variable: | |||

| Economic growth | Economic growth | Economic growth (de-meaned) | |

| Cross-Sectional OLS | Pooled OLS | De-meaned OLS | |

| (1) | (2) | (3) | |

| Constant | 26.60** | -0.85 | 0.02 |

| (1.26) | (6.02) | (0.10) | |

| International aid - Year 1 | -11.20** | ||

| (0.51) | |||

| International aid | 0.46 | ||

| (1.37) | |||

| International aid - De-meaned OLS | 1.01*** | ||

| (0.07) | |||

| Note: | p<0.1; p<0.05; p<0.01 | ||

1.2.4 Fixed-effect model

The demeaning procedure shows what happens when we use a fixed effect model.

A fixed effect model is an OLS model including a set of dummy variables for each group in your dataset. In our case, we need to include 3 dummy variable - one for each country. The model automatically excludes one to avoid multicollinearity problems.

Results for our policy variable in the fixed effect model are identical to the de-meaned OLS. The international aid coefficient represents a one-unit change in the economic growth, on average, within a country for each additional one-milion dollar received.

The fixed effect model also includes different intercepts for each country. The intercept for country A is represented by the constant. It represents the average economic growth when international aid is equal to 0. Country B intercept is 4.90 points percentage lower than Country A. Country C intercept is 12.8 points percentage lower than Country A. The coefficient of Country B and Country C indicate how much a country is different, on average, from country A (the reference category).

fixed_reg = lm ( y ~ x + as.factor(g), data = data)

stargazer( OLS, Pooled_OLS, Demean_OLS, fixed_reg,

type = "html",

dep.var.labels = c("Economic growth",

"Economic growth",

"Economic growth (de-meaned)"),

column.labels = c("Cross-Sectional OLS",

"Pooled OLS",

"De-mean OLS",

"Fixed effect"),

covariate.labels = c("Constant",

"International aid - Year 1",

"International aid",

"International aid - De-meaned OLS",

"Country B", "Country C"),

omit.stat = "all",

digits = 2, intercept.bottom = FALSE )| Dependent variable: | ||||

| Economic growth | Economic growth | Economic growth (de-meaned) | y | |

| Cross-Sectional OLS | Pooled OLS | De-mean OLS | Fixed effect | |

| (1) | (2) | (3) | (4) | |

| Constant | 26.60** | -0.85 | 0.02 | 2.75*** |

| (1.26) | (6.02) | (0.10) | (0.37) | |

| International aid - Year 1 | -11.20** | |||

| (0.51) | ||||

| International aid | 0.46 | 1.01*** | ||

| (1.37) | (0.08) | |||

| International aid - De-meaned OLS | 1.01*** | |||

| (0.07) | ||||

| Country B | -4.90*** | |||

| (0.27) | ||||

| Country C | -12.80*** | |||

| (0.28) | ||||

| Note: | p<0.1; p<0.05; p<0.01 | |||

1.3 The statistical model

To summarize key conclusions from our previous example:

- We are looking panel data, where we have multiple observations over time for different entity - e.g., countries’ economic growth over time.

The dataset below is an example of a panel dataset. It contains 35 observations. Data are grouped into 7 groups and for each group we have 5 years of observation.

head(data[13:16], 10) %>% pander()| X | Outcome | G | Y |

|---|---|---|---|

| 35926 | 97252 | 1 | 2010 |

| 39492 | 113394 | 1 | 2011 |

| 30982 | 83030 | 1 | 2012 |

| 23601 | 77981 | 1 | 2013 |

| 23717 | 79985 | 1 | 2014 |

| 49275 | 150319 | 2 | 2010 |

| 52329 | 153087 | 2 | 2011 |

| 24153 | 126583 | 2 | 2012 |

| 20998 | 111553 | 2 | 2013 |

| 16147 | 102580 | 2 | 2014 |

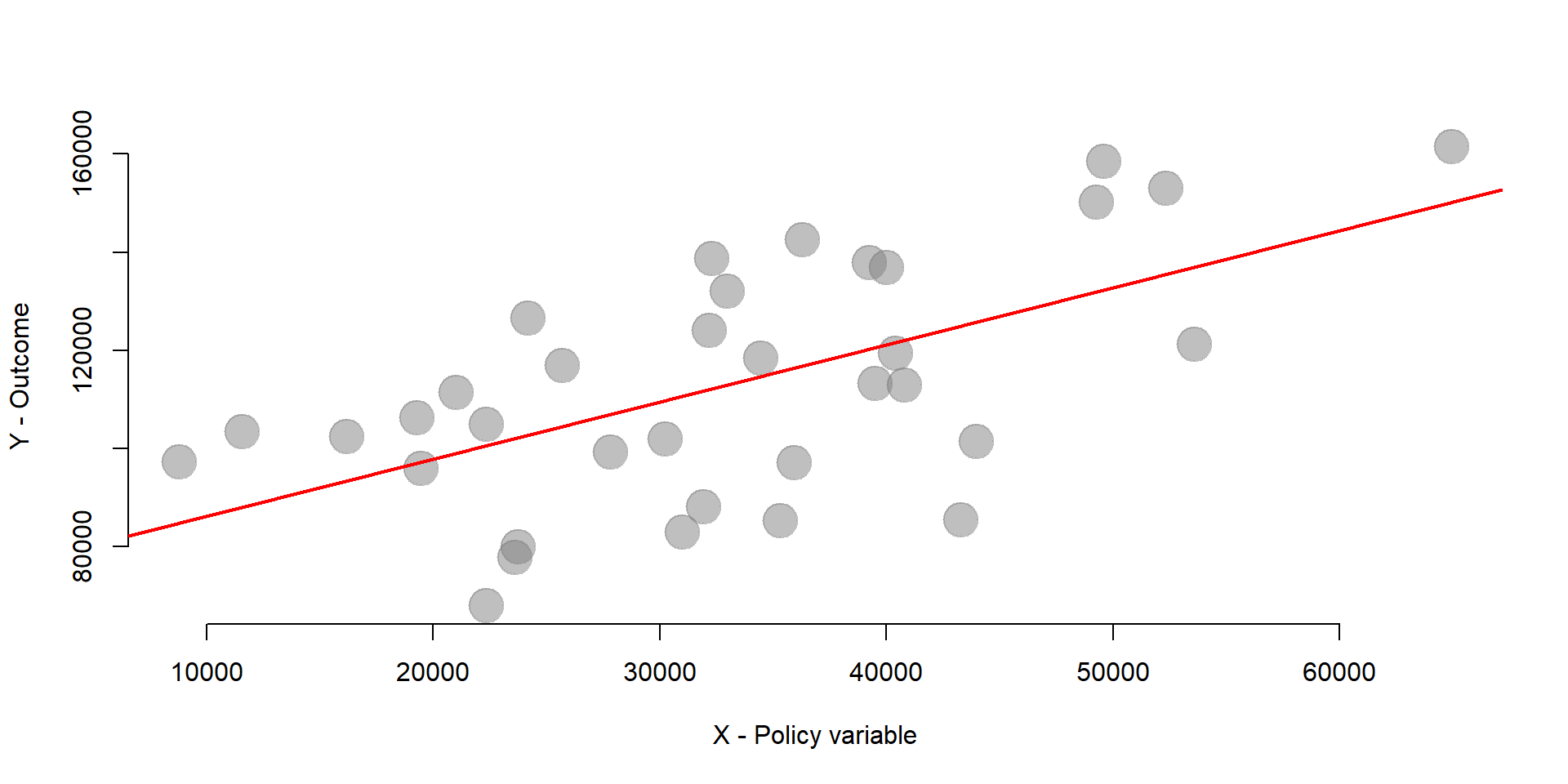

- We are interested in investigating the effect of a policy variable X on a given outcome. But because groups are different across each others (between variation), an OLS model (either a cross-sectional or a pooled model) does not approximate our data very well.

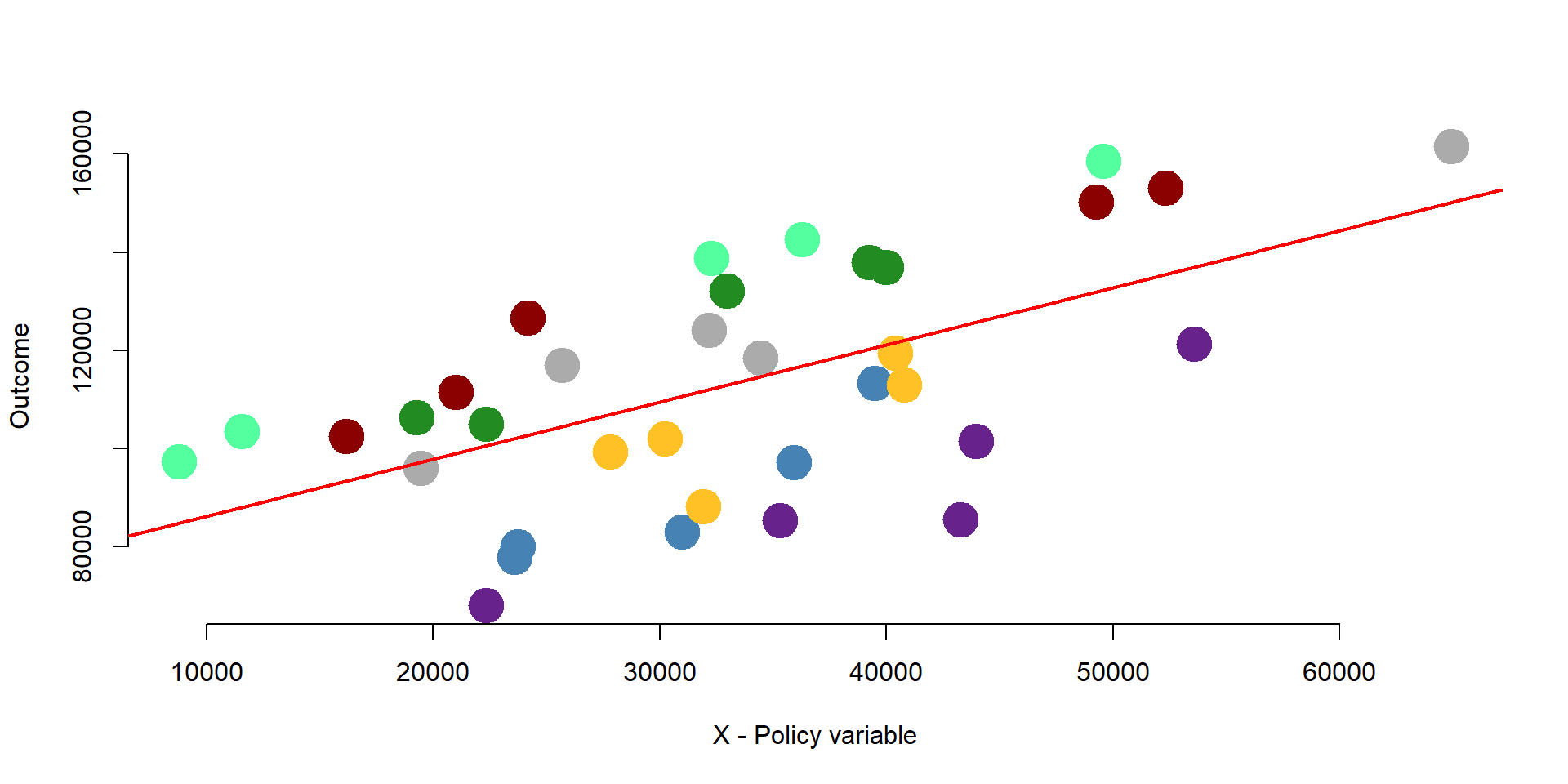

For instance, we can plot the relationship between x and y in our dataset in graph 1.11. The line represent a basic regression where X predicts Y.

plot( data$X, data$Outcome,

col=gray(0.5,0.5),

pch=19, cex=3, bty="n",

xlab="X - Policy variable", ylab="Y - Outcome" )

abline( lm( Outcome ~ X, data=data ), col="red", lwd=2 )

Figure 1.11: Relationship between X and Y

- Because we know that data are grouped into 7 different groups we can use fixed effets to control for between variation and estimate the average within groups effect of x on y.

In other words, we would like to obtain a regression line for each group in our dataset, as one unique regression line is not a good approximation.

palette( c( "steelblue", "darkred", "forestgreen", "goldenrod1", "gray67", "darkorchid4", "seagreen1" )) # Set colors

plot( data$X, data$Outcome,

col=factor(data$G),

pch=19, cex=3, bty="n",

xlab="X - Policy variable", ylab="Outcome" )

abline( lm( Outcome ~ X, data=data ), col="red", lwd=2 )

- A fixed effects includes a set of dummy variables each of which represents a group. The equation of a fixed effect model is the following:

\[\begin{equation} \text{Yit} = \beta_1*Xit + \alpha_i + \text{e} \tag{1.1} \end{equation}\]

Where:

\(\text{i}\) indicates the number of groups in the dataset;

\(\text{t}\) indicates the number of time periods in the dataset;

\(\text{Yit}\) is the outcome variable;

\(\beta_1\) is the coefficient of the policy variable; it indicates the average changes in the outcome over time within each group;

\(\alpha_i\) is a set of dummy variable for each entity;

\(\text{e}\) is the error term.

We can estimate the model as follows:

reg = lm( Outcome ~ X + factor(G) - 1, data = data )

stargazer( reg,

type = "html",

dep.var.labels = (" "),

column.labels = c(" "),

covariate.labels = c("Policy variable", "Group 1", "Group 2", "Group 3", "Group 4", "Group 5", "Group 6", "Group 7"),

omit.stat = "all",

digits = 2, intercept.bottom = FALSE )| Dependent variable: | |

| Policy variable | 1.46*** |

| (0.09) | |

| Group 1 | 45,424.04*** |

| (3,855.35) | |

| Group 2 | 81,237.27*** |

| (3,970.97) | |

| Group 3 | 78,774.94*** |

| (3,856.03) | |

| Group 4 | 54,388.96*** |

| (4,077.09) | |

| Group 5 | 71,810.91*** |

| (4,149.83) | |

| Group 6 | 34,387.68*** |

| (4,445.21) | |

| Group 7 | 87,701.40*** |

| (3,670.89) | |

| Note: | p<0.1; p<0.05; p<0.01 |

# We add factor(G) - 1 in our model to add a set of intercepts; one for each group.

fe <- lm( Outcome ~ X + factor(G) - 1, data = data )

yhat <- fe$fittedWe plot our results in graph 1.12. As you can see, the lines have the same slope, but different intercept. The slope is represented by the coefficient of international aid, the policy variable. It represents the average within-group variation across time. The intercepts represent the average of each group when international aid is equal to 0. A fixed effect model better approximates the actual strucutre of the data and controls for group-level characteristics.

Note that the group-level dummy variables control for all time-invariant characteristics of the group. But they do not control for characteristics that change over time.

plot( data$X, yhat, col=factor(data$G),

pch=19, cex=2, xlab="X", ylab="Y", bty="n" )

Figure 1.12: Fixed effect model

2 A fixed effect model

](FIGURE/FixEffs/labs.jpg)

Figure 2.1: Source: Wikimedia

Countries strongly invest in Research & Development (R&D). The United States spend 2.7% of their GDP into research and development. One of the goals of these investments is to provide funding to companies and incentivize private investments. In this example, we want to assess the impact of public expenditure on research and development (R&D) on companies’ investments.

# Research and Dev Data Example

data <-

structure(list(Company = structure(c(1L, 1L, 1L, 1L, 1L, 2L,

2L, 2L, 2L, 2L, 3L, 3L, 3L, 3L, 3L, 4L, 4L, 4L, 4L, 4L, 5L, 5L,

5L, 5L, 5L, 6L, 6L, 6L, 6L, 6L, 7L, 7L, 7L, 7L, 7L, 8L, 8L, 8L,

8L, 8L, 9L, 9L, 9L, 9L, 9L, 10L, 10L, 10L, 10L, 10L), .Label = c("Company1",

"Company10", "Company2", "Company3", "Company4", "Company5",

"Company6", "Company7", "Company8", "Company9"), class = "factor"),

Year = structure(c(1L, 2L, 3L, 4L, 5L, 1L, 2L, 3L, 4L, 5L,

1L, 2L, 3L, 4L, 5L, 1L, 2L, 3L, 4L, 5L, 1L, 2L, 3L, 4L, 5L,

1L, 2L, 3L, 4L, 5L, 1L, 2L, 3L, 4L, 5L, 1L, 2L, 3L, 4L, 5L,

1L, 2L, 3L, 4L, 5L, 1L, 2L, 3L, 4L, 5L), .Label = c("2010",

"2011", "2012", "2013", "2014"), class = "factor"), RDPub = c(36084.6765050791,

39167.0736835245, 25470.5788846145, 38535.3290719112, 49854.0961164269,

34432.7195247533, 47245.1859513487, 33777.5660532294, 32980.9108059019,

18674.6311495257, 51582.5751143172, 49657.5920063913, 21151.7482521862,

44989.3944563987, 27469.6531842028, 49232.2994550922, 33291.5195048303,

41546.8796686058, 22638.4592049686, 31625.9607988133, 31303.50781271,

45974.624780176, 34504.7441273564, 20774.7954185228, 27950.0144375957,

40690.7676159195, 46561.2962668267, 26188.3993809124, 43009.8737838624,

22704.6853492831, 58388.1638517069, 48936.4747616786, 52636.7608395869,

47799.3428502192, 26684.9981230871, 16972.5078799265, 21749.0137860797,

57615.815520373, 42115.9212584994, 24784.2851609194, 24700.4171860131,

43935.0254079564, 45875.8362000498, 34058.1942678181, 34929.4644701799,

43575.4984304363, 12647.1563283971, 23945.2484980536, 26468.5011319786,

47789.1957893751), RD = c(81350.714800065, 94166.5836970621,

78826.8375271711, 113518.1052785, 131910.186554822, 113235.255062069,

144046.776937286, 128988.32612132, 142774.887677862, 110726.882333979,

140466.844220037, 152997.657483535, 121013.721249736, 146230.227928565,

124621.042472361, 141553.47064653, 118274.962010614, 140517.569536745,

129300.130864955, 142068.771051653, 78596.777474216, 106571.998091857,

91542.9150565959, 91568.9285167832, 124649.074154644, 114606.917489605,

118348.580376089, 112382.812865581, 134644.20839165, 113341.657058609,

115421.233970692, 105148.345003555, 94432.2359901331, 124823.732848258,

91339.5836525477, 97820.2935444977, 103924.578594518, 154164.264112627,

161462.972856511, 128987.507909402, 71910.0053382578, 116670.754012959,

105724.09794328, 118671.548890751, 114642.783618629, 117707.976981318,

80315.8777704682, 89917.1704724757, 115655.631519908, 155541.106401779

)), class = "data.frame", row.names = c(NA, -50L))Data are structured in a panel dataset where we have 10 companies and observations over a five-year period. You can see here the structure of the data for Company 1, Company 10, and Company 2.

head(data,15) %>% pander()| Company | Year | RDPub | RD |

|---|---|---|---|

| Company1 | 2010 | 36085 | 81351 |

| Company1 | 2011 | 39167 | 94167 |

| Company1 | 2012 | 25471 | 78827 |

| Company1 | 2013 | 38535 | 113518 |

| Company1 | 2014 | 49854 | 131910 |

| Company10 | 2010 | 34433 | 113235 |

| Company10 | 2011 | 47245 | 144047 |

| Company10 | 2012 | 33778 | 128988 |

| Company10 | 2013 | 32981 | 142775 |

| Company10 | 2014 | 18675 | 110727 |

| Company2 | 2010 | 51583 | 140467 |

| Company2 | 2011 | 49658 | 152998 |

| Company2 | 2012 | 21152 | 121014 |

| Company2 | 2013 | 44989 | 146230 |

| Company2 | 2014 | 27470 | 124621 |

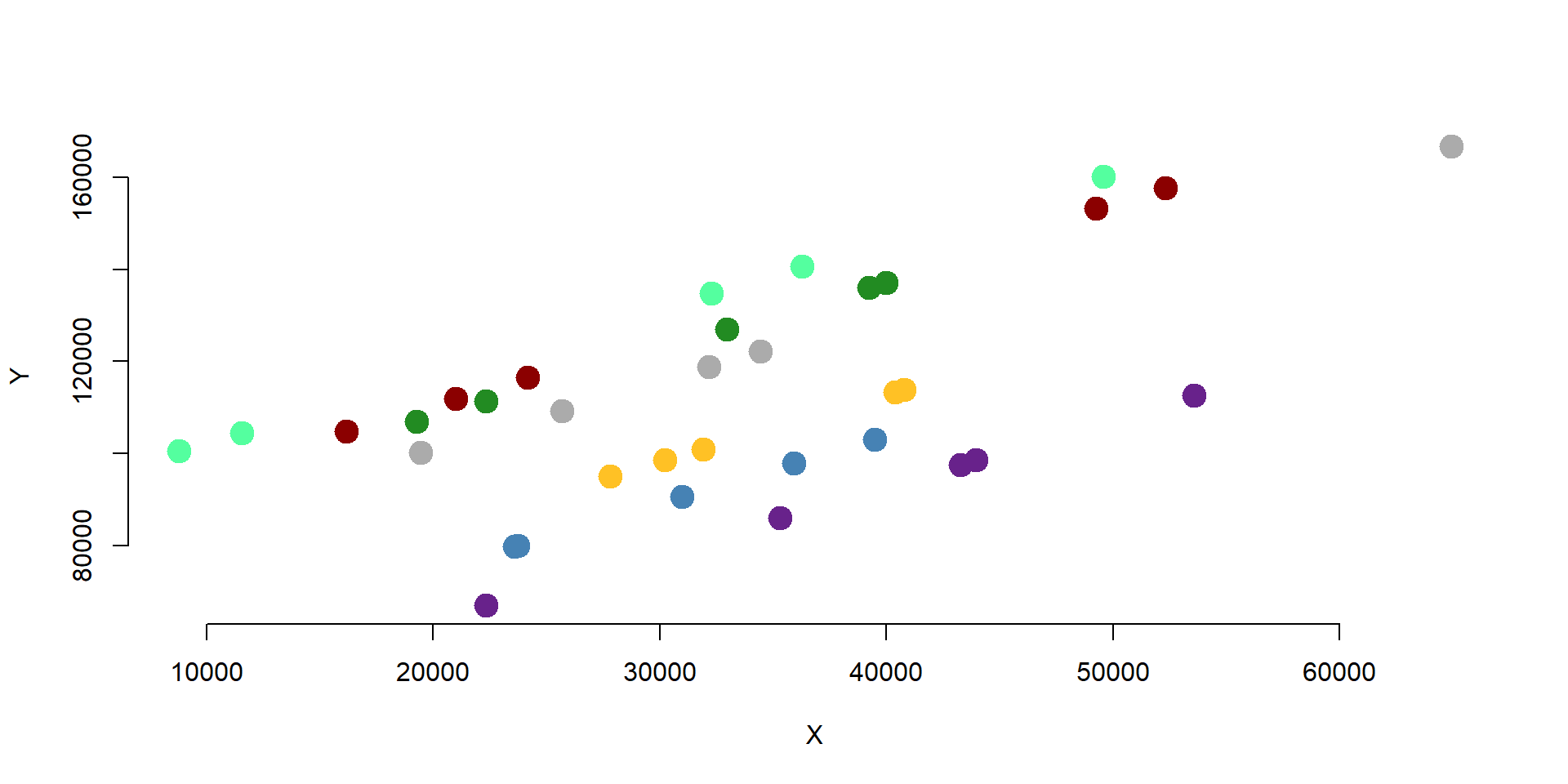

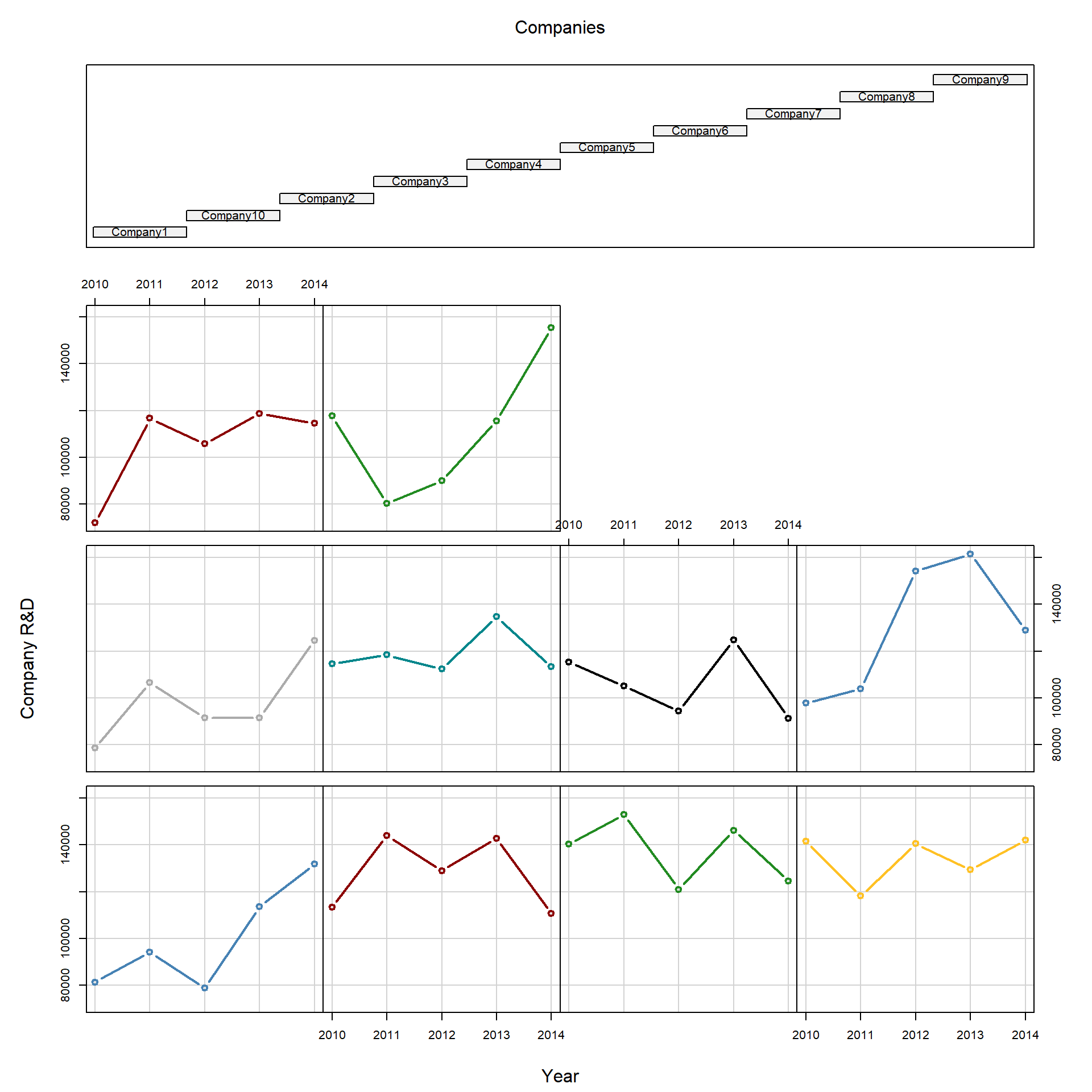

Each company has different levels of investment in research and development. We plot each company average R&D investement in graph 2.2. Each bar represents a company. The middle dot is the average R&D investment. The bars go from the minimum level of investment to the maximum.

You can see how Company 1 has a lower average R&D investment than Company 2. Company 5 has a smaller range than the other companies, with a minimum investment that it is higher than the average outcome of company 6 and so on.

There are can be several explanation for these differences: size, different managerial practices, or business culture, among others. Note that this variable changes across organizations but not across time.

plotmeans(RD ~ Company,

xlab = "Companies", ylab = "R&D Investment",

barwidth = 2,

col =

palette( c( "steelblue", "darkred", "forestgreen", "goldenrod1", "gray67", "turquoise4", "tan1" )),

barcol =

palette( c( "steelblue", "darkred", "forestgreen", "goldenrod1", "gray67", "turquoise4", "tan1" )),

data = data)

Figure 2.2: Heterogeneity across companies

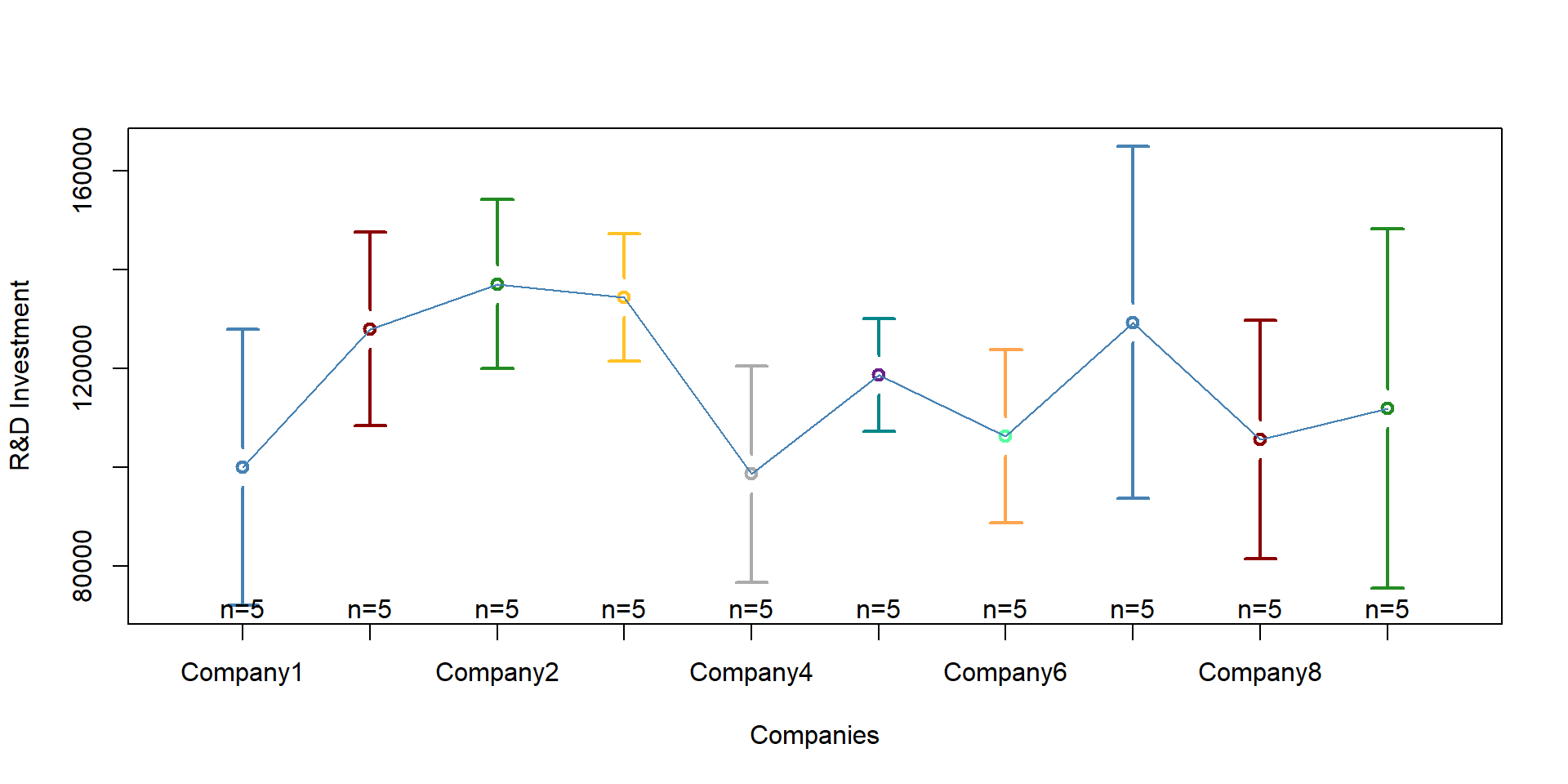

Variation occurs also across years. We can plot each year in graph 2.3. Year 2014 shows great variability than year 2, for instance.

Variation across years can be explained by new national policies that get implemented, internaional agreeements, taation policies, or the economic situation. Note that these varibles vary across years but not across entities (e.g., national policies affect all companies in the dataset).

plotmeans(RD ~ Year,

main="Heterogeineity across companies",

xlab ="Years, 2010 - 2014", ylab = "R&D Investment",

data = data, barwidth = 2,

col = "gray52",

barcol = "gray52")

Figure 2.3: Heterogeneity across years

We can look at both company and year variation at the same time in one graph:

palette( c( "steelblue", "darkred", "forestgreen", "goldenrod1", "gray67", "turquoise4", "black"))

coplot(RD ~ as.factor(Year)|as.factor(Company),

xlab = c("Year", "Companies"), ylab = "Company R&D",

col = as.factor(Company), lwd = 2,

type="b", data=data)

Figure 2.4: Heterogeneity across companies and years

Because of differences across companies, we want to use fixed effects to control for these unobserved characteristics that affect variation across entities (e.g., companies).

2.1 The statistical model

We can start by looking at the results that we would have if we were running the model as an OLS regression.

reg = lm(RD ~ RDPub)

stargazer( reg,

type = "html",

dep.var.labels = ("Company R&D"),

column.labels = c(" "),

covariate.labels = c("Constant", "Public R&D"),

omit.stat = "all",

digits = 2, intercept.bottom = FALSE )| Dependent variable: | |

| Company R&D | |

| Constant | 83,671.23*** |

| (9,276.31) | |

| Public R&D | 0.92*** |

| (0.25) | |

| Note: | p<0.1; p<0.05; p<0.01 |

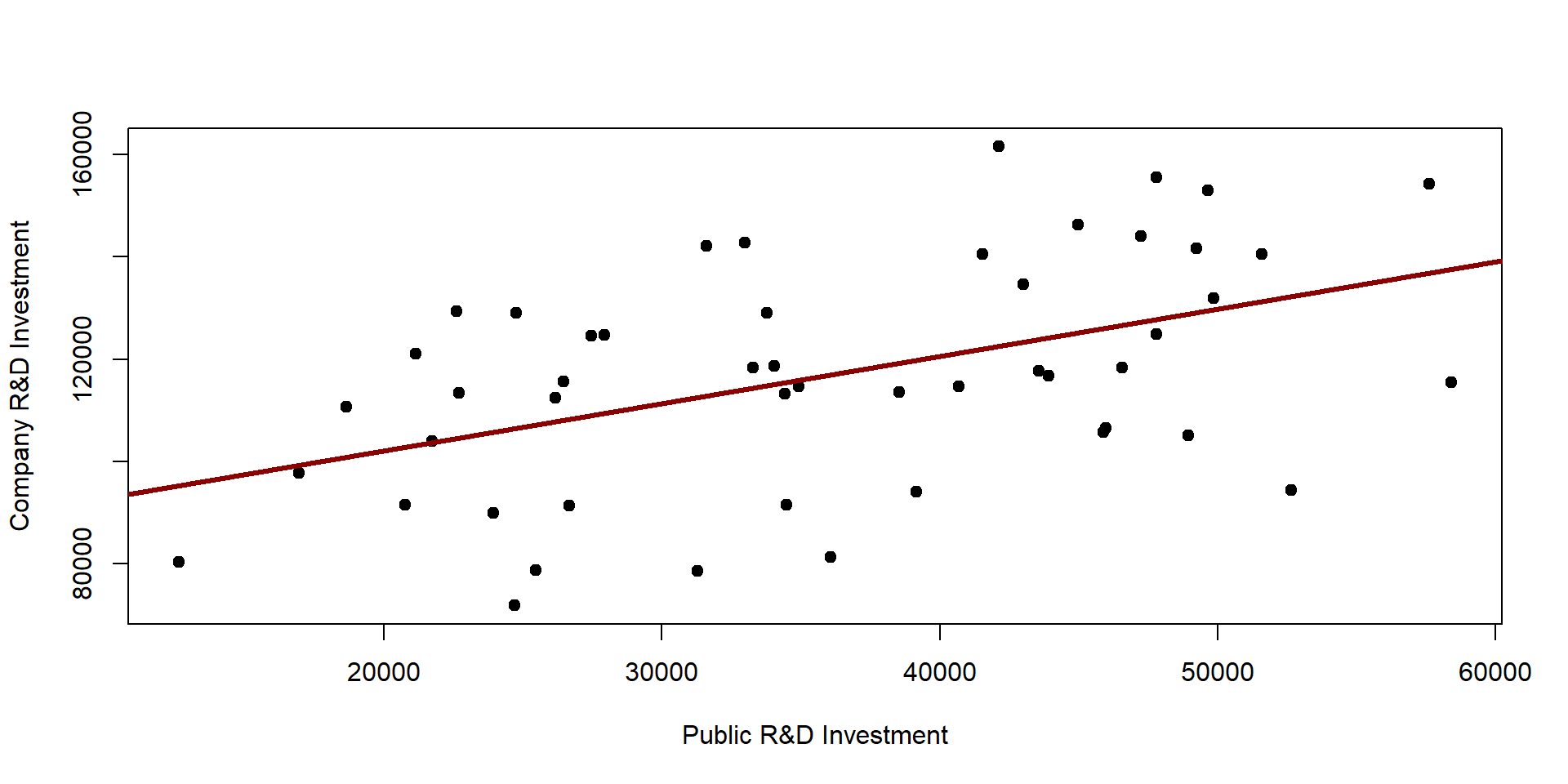

The effect of public R&D investment on private ones is equal to 0.92 , indicating that for each dollar invested by the government, private companies will invest 0.92 dollars.

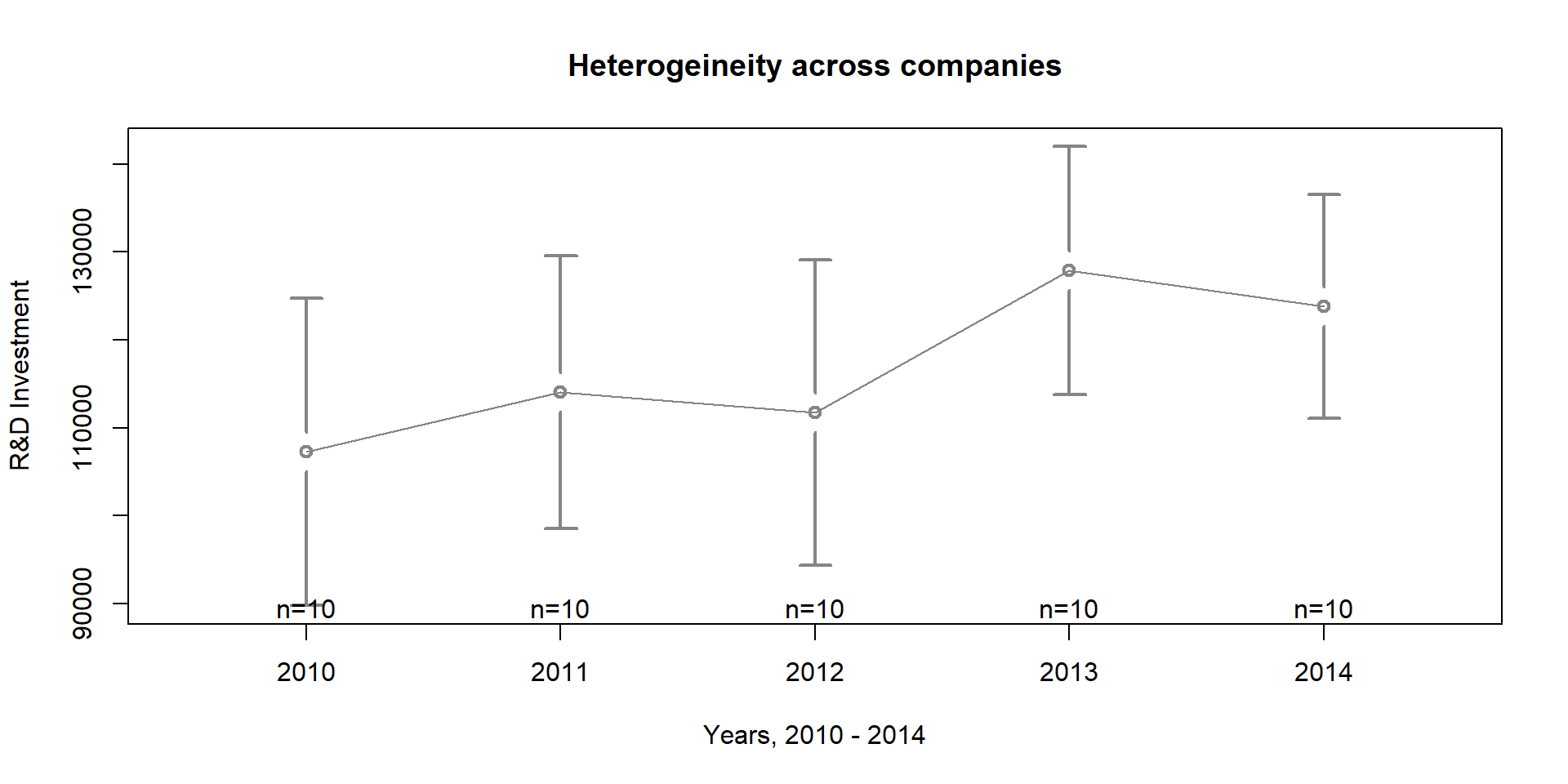

We can plot our observations and the OLS results on graph 2.5. The black dots represent our observations. The red line represents OLS results.

We can see that the line only approximates the actual observations.

plot( RDPub, RD, pch=19,

xlab="Public R&D Investment", ylab="Company R&D Investment")

abline( lm( RD ~ RDPub ),lwd=3, col="darkred" )

Figure 2.5: OLS results

We can improve the results by including a set of dummy variables, one for each company, so as we can control for preliminary differences in R&D spending across companies.

regDummy <- lm ( RD ~ RDPub + as.factor(Company), data = data )

stargazer( regDummy,

type = "html",

dep.var.labels = ("Company R&D"),

column.labels = c(" "),

omit.stat = "all",

covariate.labels = c("Constant (or Company 1)",

"Public R&D", "Company 10",

"Company 2", "Company 3",

"Company 4", "Company 5",

"Company 6", "Company 7",

"Company 8", "Company 9"),

digits = 2, intercept.bottom = FALSE )| Dependent variable: | |

| Company R&D | |

| Constant (or Company 1) | 56,569.91*** |

| (9,411.86) | |

| Public R&D | 1.15*** |

| (0.19) | |

| Company 10 | 33,047.18*** |

| (8,830.99) | |

| Company 2 | 35,794.77*** |

| (8,795.26) | |

| Company 3 | 36,860.79*** |

| (8,801.86) | |

| Company 4 | 5,193.58 |

| (8,857.36) | |

| Company 5 | 20,994.55** |

| (8,800.51) | |

| Company 6 | -4,121.64 |

| (8,954.31) | |

| Company 7 | 35,253.30*** |

| (8,845.63) | |

| Company 8 | 6,857.00 |

| (8,795.14) | |

| Company 9 | 19,830.50** |

| (8,887.64) | |

| Note: | p<0.1; p<0.05; p<0.01 |

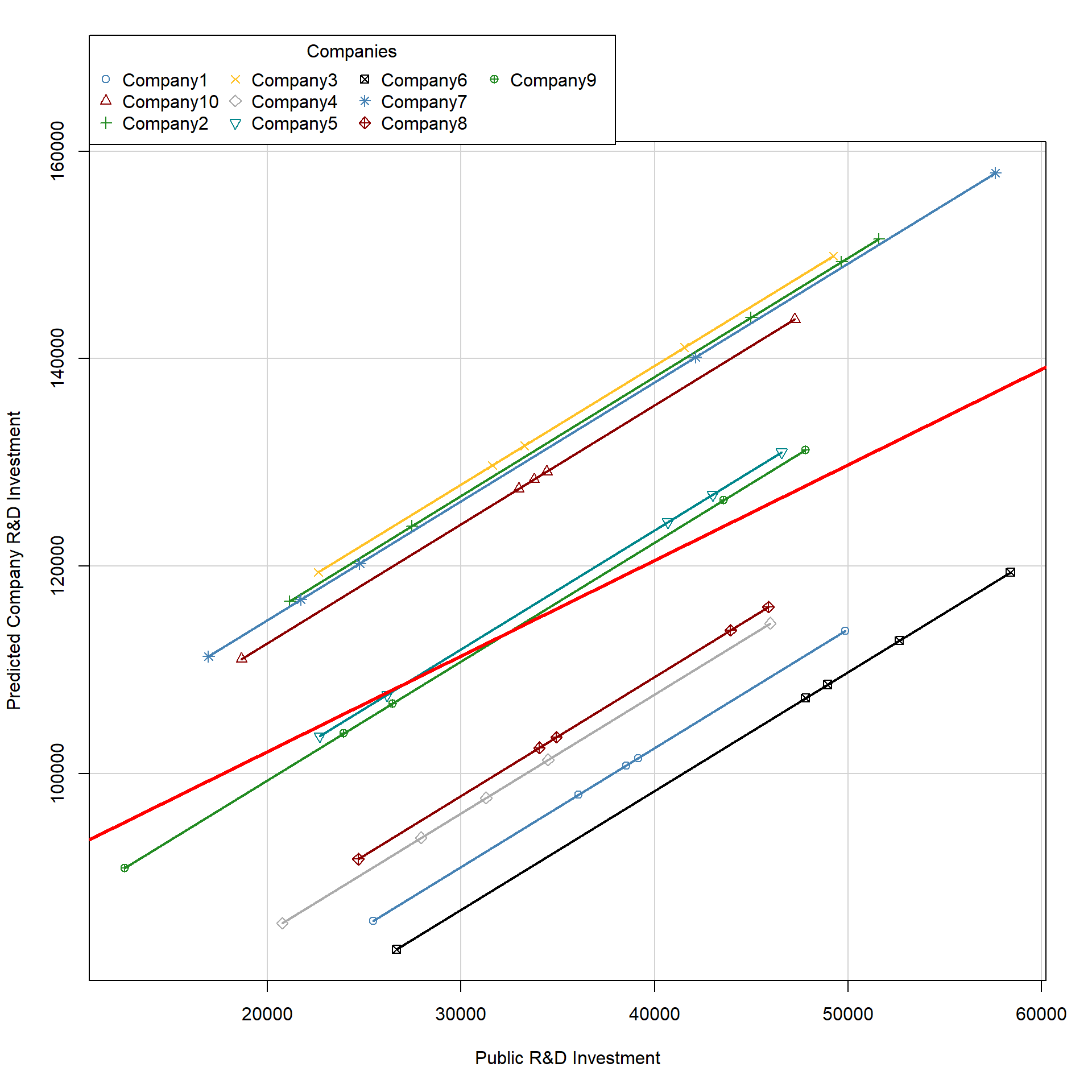

Results of our variable of interest (Public R&D) change; now, for each dollar spent by the public, companies spend 1.15.

We can see how the regression line varies for each company. The dark red, thicker line represent the regression results when dummies are not included.

Note that each line has a different intercept (which is predicted by our dummies) but the same slope - as \(\beta_1\) estimates the average within effect for all groups.

yhat <- regDummy$fitted

colline <- palette( c( "steelblue", "darkred", "forestgreen",

"goldenrod1", "gray67", "turquoise4", "black" ) )

scatterplot( yhat ~ RDPub | as.factor(Company),

boxplots = FALSE,

xlab = "Public R&D Investment",

ylab = "Predicted Company R&D Investment",

smooth = FALSE, col=colline,

lwd = 2, legend=c(title = "Companies"))

abline( lm( RD ~ RDPub ), lwd = 3, col = "red" )

Figure 2.6: Plot of OLS with dummy variables

2.2 plm package

We can use the plm package to estimate the fixed effects model. In the output table, we compare results across the OLS, the OLS with dummies, and the fixed effect model.

## The model is specified as a OLS model,

## but we need to indicate the "index" -

## i.e. the group variable in the model

fixed <- plm( RD ~ RDPub, data=data,

index=c("Company"), model="within" )

stargazer( reg, regDummy, fixed,

type = "html",

dep.var.labels = ("Company R&D"),

column.labels = c("OLS", "OLS with Dummy", "FE"),

covariate.labels = c("Constant (or Company 1)",

"Public R&D", "Company 10",

"Company 2", "Company 3",

"Company 4", "Company 5",

"Company 6", "Company 7",

"Company 8", "Company 9"),

omit.stat = "all",

digits = 2, intercept.bottom = FALSE )| Dependent variable: | |||

| Company R&D | |||

| OLS | panel | ||

| linear | |||

| OLS | OLS with Dummy | FE | |

| (1) | (2) | (3) | |

| Constant (or Company 1) | 83,671.23*** | 56,569.91*** | |

| (9,276.31) | (9,411.86) | ||

| Public R&D | 0.92*** | 1.15*** | 1.15*** |

| (0.25) | (0.19) | (0.19) | |

| Company 10 | 33,047.18*** | ||

| (8,830.99) | |||

| Company 2 | 35,794.77*** | ||

| (8,795.26) | |||

| Company 3 | 36,860.79*** | ||

| (8,801.86) | |||

| Company 4 | 5,193.58 | ||

| (8,857.36) | |||

| Company 5 | 20,994.55** | ||

| (8,800.51) | |||

| Company 6 | -4,121.64 | ||

| (8,954.31) | |||

| Company 7 | 35,253.30*** | ||

| (8,845.63) | |||

| Company 8 | 6,857.00 | ||

| (8,795.14) | |||

| Company 9 | 19,830.50** | ||

| (8,887.64) | |||

| Note: | p<0.1; p<0.05; p<0.01 | ||

As in the model with the dummy variables, the coefficient of the fixed effect model indicates, on average, how much the outcome (Company R&D) changes per country over time for a one unit increases of x (Public R&D).

Note that the fixed effect model estimated using plm does not have an intercept (see column #3 in the above table). When estimating the model with plm, we can retrieve each company’ intercept by using the command \(fixef\). The intercept for company A is the intercept in the dummy model (see column #2 in the above table).

fixef(fixed) ## Company1 Company10 Company2 Company3 Company4 Company5 Company6 Company7

## 56570 89617 92365 93431 61763 77564 52448 91823

## Company8 Company9

## 63427 76400The plm package also allows us to test whether the fixed effect model is a better choice compared to the OLS model with the \(pFtest\) command. If the p-value is lower than 0.05, than the fixed effect model is a better choice.

pFtest(fixed, reg) ##

## F test for individual effects

##

## data: RD ~ RDPub

## F = 6.4244, df1 = 9, df2 = 39, p-value = 1.516e-05

## alternative hypothesis: significant effects2.3 Key notes on fixed effects

Before moving to random effects, we need to point out some important features that you need to remember about fixed effect models:

- Using a fixed effect model allows us to control for all time - invariant characteristics of an entity (even characteristics that you cannot measure or observe); we assume that some characteristics of the entity we are investigating (e.g., the country or the company) affect our predictor and we need to control for it. Note that a fixed effect model control for all time invariant characterists, thus reducing omit variable bias issues.

- BUT you cannot investigate time-invariant characteristics using fixed effects. For instance, in the previous example, we cannot investigate the effect of a company location. As the location does not change over time, the fixed effects “absorb” this variation. Statistically, time-invariant characteristics are perfectly collinear with the dummy, so as their effect cannot be estimated in the model (you can see a practical example in section 3)

- Because of this, you need to keep in mind that fixed effects are designed to investigate time-variant characteristics within an entity.

- Finally, fixed effects assume that each entity is unique, such that the error terms and each entity’s constant are not correlated with other entities’ error terms and constant. In other words, there is independence across entities. This condition can be tested using a randome effect model and an Hausman test (see section 4).

3 Replication of a study

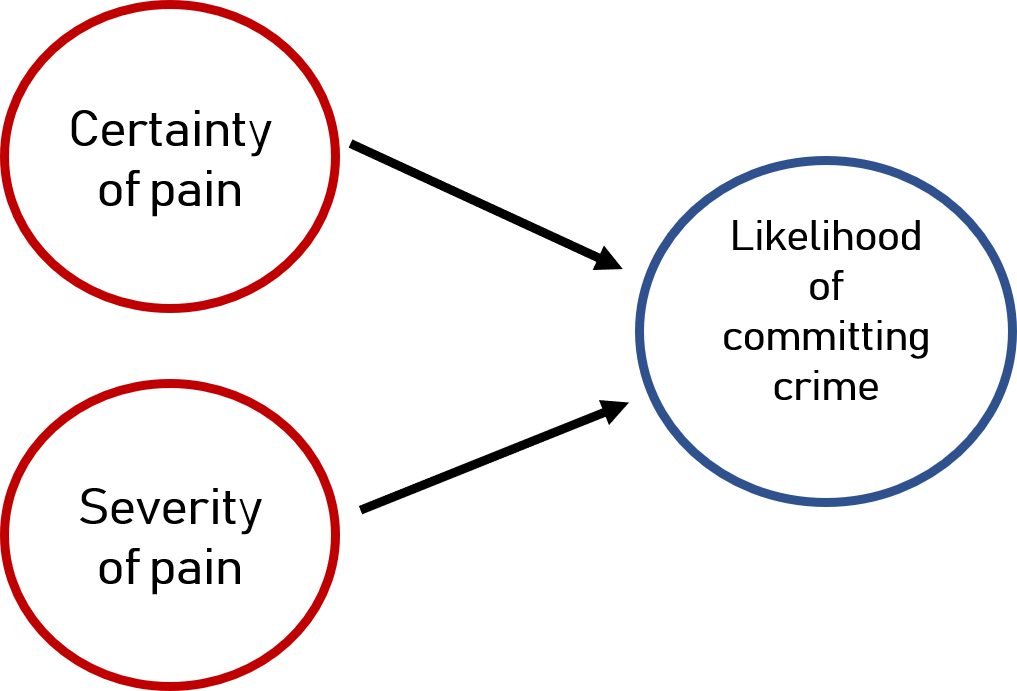

Figure 3.1: Deterrence Theory

As replication study we are going to look at research on deterrence theory. Deterrence theory argues that individuals refrain from engaging in deviant activities if they know they will be punished and if they know the pain will be severe, as illustrated in figure 3.1. This theory is often applied to justify more severe penalties ( such as the death penalty ) and explain why crime rates have decreased in recent years. Of course, there are mixed opinions and evidence.

We will use a panel dataset of 90 observational units (counties) from 1981 to 1987 collected by Cornwell and Trumbull in 1994 (you can find the full paper here ). Data look at whether the certainty of (1) being arrested, (2) being convicted, or (3) being sentencing to prison and (4) the severity of the pain decrease crimes within a given county.

Research question: Do certainty and severity of pain lead to lower crime?

Hypothesis: Based on deterrence theory, we argue that greater certainty or severity of pain will decrease crime in a given county.

3.1 Data

We start by uploading the data Crime from the plm package,

# library( plm )

data( Crime )

dataCrime <- CrimeYou can see te structure of the dataset and the main independent variables in the following table. Note that for each county (1 and 3 in this case), we have data from 1981 to 1987.

head( dataCrime[,1:7], 10 ) %>% pander()| county | year | crmrte | prbarr | prbconv | prbpris | avgsen |

|---|---|---|---|---|---|---|

| 1 | 81 | 0.03988 | 0.2897 | 0.4021 | 0.4722 | 5.61 |

| 1 | 82 | 0.03834 | 0.3381 | 0.433 | 0.507 | 5.59 |

| 1 | 83 | 0.0303 | 0.3304 | 0.5257 | 0.4797 | 5.8 |

| 1 | 84 | 0.03473 | 0.3625 | 0.6047 | 0.5201 | 6.89 |

| 1 | 85 | 0.03657 | 0.3254 | 0.5787 | 0.4971 | 6.55 |

| 1 | 86 | 0.03475 | 0.3261 | 0.5123 | 0.4399 | 6.9 |

| 1 | 87 | 0.0356 | 0.2983 | 0.5276 | 0.4362 | 6.71 |

| 3 | 81 | 0.01639 | 0.2029 | 0.869 | 0.4658 | 8.45 |

| 3 | 82 | 0.01907 | 0.1622 | 0.7722 | 0.377 | 5.71 |

| 3 | 83 | 0.01515 | 0.1816 | 1.028 | 0.4384 | 8.69 |

These are the main independent variables that we will be investigating:

| Variable | Description |

|---|---|

| Crime | FBI index crimes to county population |

| prbarr | Ratio of offenses to arrests (probability of arrest) |

| prbconv | Ratio of convinction to arrests (probability of conviction) |

| prbpris | Ratio of prison sentences to convinctions (probability of prison sentence) |

| avgsen | Average number of days for prison sentence (severity of the pain) |

Here are some basic statistics.

stargazer( dataCrime,

type = "html",

keep = c("^crmrte", "^prbarr", "^prbconv", "^prbpris", "^avgsen"),

omit.summary.stat = c("p25", "p75"),

digits = 2 )| Statistic | N | Mean | St. Dev. | Min | Max |

| crmrte | 630 | 0.03 | 0.02 | 0.002 | 0.16 |

| prbarr | 630 | 0.31 | 0.17 | 0.06 | 2.75 |

| prbconv | 630 | 0.69 | 1.69 | 0.07 | 37.00 |

| prbpris | 630 | 0.43 | 0.09 | 0.15 | 0.68 |

| avgsen | 630 | 8.95 | 2.66 | 4.22 | 25.83 |

The average crime rate across all counties and years is 3.84%. The average probability of arrest is 30.74%. The average probability of convinction is 68.86%. The average probability of prison sentence is 42.55%. The average length of prison stay is 8.955 days.

These are the control variables that we will also include in the model:

| Variable | Description |

|---|---|

| wcon | Average wage in constuction |

| wtuc | Average wage in transportation, Utilities and Communication |

| wtrd | Average wage in retail trade |

| wfir | Average wage in finance, insurance, real estate |

| wser | Average wage in service industry |

| wmfg | Average wage in manufacturing |

| wfed | Average wage in federal government |

| wsta | Average wage in state government |

| wloc | Average wage in local government |

| smsa | Counties included in a metropolitan area |

| density | population density |

| region | County location: West, central or other |

| polpc | Police per capita |

| pctymle | Percentage male between 15 and 24 |

| pctmin | Percentage minority |

3.2 Analysis

We will estimate two models. The first model is an OLS model. The second model will include fixed effects to control for variation across years and counties.

# crime as a function of punishment severity

model.ols <- lm( crmrte ~ prbarr + prbconv + prbpris + avgsen +

# geographic controls

polpc + density + region + smsa +

# demographic controls

pctmin + pctymle +

# economic controls

wcon + wtuc + wtrd + wfir + wser + wmfg +

wfed + wsta + wloc,

data = dataCrime)

model.fe <- plm( crmrte ~ prbarr + prbconv + prbpris + avgsen +

# geographic controls

polpc + density + region + smsa +

# demographic controls

pctmin + pctymle +

# economic controls

wcon + wtuc + wtrd + wfir + wser + wmfg +

wfed + wsta + wloc,

index=c("county"),

data = dataCrime)

stargazer( model.ols, model.fe,

type = "html",

dep.var.labels = ("Crime rates"),

column.labels = c("OLS", "Fixed effects"),

intercept.bottom = FALSE,

omit.stat = "all",

digits = 2 )| Dependent variable: | ||

| Crime rates | ||

| OLS | panel | |

| linear | ||

| OLS | Fixed effects | |

| (1) | (2) | |

| Constant | 0.01*** | |

| (0.005) | ||

| prbarr | -0.03*** | -0.01*** |

| (0.003) | (0.002) | |

| prbconv | -0.002*** | -0.001*** |

| (0.0003) | (0.0002) | |

| prbpris | -0.0000 | -0.001 |

| (0.005) | (0.004) | |

| avgsen | -0.0000 | 0.0002* |

| (0.0001) | (0.0001) | |

| polpc | 2.59*** | 2.15*** |

| (0.17) | (0.16) | |

| density | 0.01*** | 0.01* |

| (0.001) | (0.005) | |

| regionwest | -0.01*** | |

| (0.002) | ||

| regioncentral | -0.01*** | |

| (0.001) | ||

| smsayes | -0.002 | |

| (0.003) | ||

| pctmin | 0.0001*** | |

| (0.0000) | ||

| pctymle | 0.08*** | -0.21 |

| (0.02) | (0.15) | |

| wcon | -0.0000 | -0.0000 |

| (0.0000) | (0.0000) | |

| wtuc | -0.0000 | -0.0000 |

| (0.0000) | (0.0000) | |

| wtrd | 0.0000 | -0.0000 |

| (0.0000) | (0.0000) | |

| wfir | -0.0000 | -0.0000 |

| (0.0000) | (0.0000) | |

| wser | -0.0000 | 0.0000 |

| (0.0000) | (0.0000) | |

| wmfg | 0.0000 | -0.0000 |

| (0.0000) | (0.0000) | |

| wfed | 0.0000*** | -0.0001*** |

| (0.0000) | (0.0000) | |

| wsta | -0.0000* | 0.0000 |

| (0.0000) | (0.0000) | |

| wloc | 0.0000 | 0.0000** |

| (0.0000) | (0.0000) | |

| Note: | p<0.1; p<0.05; p<0.01 | |

The basic take-away is that our interpretation of the main policy variables will be extremely biased unless we are accounting for baseline levels of crime in communities.

We see that as the probability of getting arrested (prbarr) and getting convicted (prbconv) increase crime rates are falling, but the pooled OLS model is grossly over-stating their impact by a factor of two or three. One possible explanation is that communities with a lower baseline level of crime have more resources to pursue arrests and convictions. After controlling for baseline levels of both we see that marginal increases in arrests or convinctions don’t actually serve as a big deterrent (not as much as the first model suggests).

The convinction rate is not significant in either model. But oddly the length of prison sentences is actually positively related to crime. Here we need to use some caution because we know that time-variant omitted variables can still cause bias. It would be odd that people not planning to commit crimes would decide to when they learn prison sentences are longer. It is more likely that communities that were increasing sentences were also experiencing increases in crime rates for other reasons. There is a lot to unpack there, but even without a good theory we can see that there is little evidence to support the idea that getting tough on crime will yield large dividends for public safety, at least not at the levels initially expected using under-specified cross-sectional models.

Note differences across the two models:

- The fixed effect model has no intercept. We can retrieve the intercept for each county using the command \(fixef\).

fixef( model.fe ) ## 1 3 5 7 9 11 13 15

## 0.060084 0.046161 0.043979 0.056184 0.039635 0.048502 0.066940 0.053980

## 17 19 21 23 25 27 33 35

## 0.055863 0.052418 0.056969 0.058080 0.050766 0.063337 0.047980 0.064051

## 37 39 41 45 47 49 51 53

## 0.055701 0.043151 0.055641 0.059780 0.063772 0.077619 0.084570 0.046359

## 55 57 59 61 63 65 67 69

## 0.080100 0.053936 0.046895 0.055413 0.072888 0.091768 0.037022 0.057987

## 71 77 79 81 83 85 87 89

## 0.060076 0.075831 0.043475 0.059776 0.063261 0.074490 0.063176 0.055283

## 91 93 97 99 101 105 107 109

## 0.065907 0.070107 0.065432 0.060630 0.063768 0.077222 0.075993 0.045315

## 111 113 115 117 119 123 125 127

## 0.042160 0.042240 0.021003 0.050713 0.066490 0.060926 0.057370 0.072944

## 129 131 133 135 137 139 141 143

## 0.066800 0.055086 0.099563 0.088889 0.040846 0.064359 0.071413 0.052689

## 145 147 149 151 153 155 157 159

## 0.060631 0.090529 0.044583 0.056223 0.062659 0.063944 0.056190 0.056542

## 161 163 165 167 169 171 173 175

## 0.052268 0.053094 0.075751 0.052740 0.043195 0.050645 0.035903 0.045847

## 179 181 183 185 187 189 191 193

## 0.057790 0.087292 0.068353 0.033402 0.062412 0.068649 0.055389 0.055670

## 195 197

## 0.070040 0.041247The variables that are time-invariant such as region type, location in a metropolitan area, or minority percentage are removed from the fixed-effect model.

We can compare OLS and fixed effect results using the \(pFtest\) command. Remember if the p-value < 0.05, the fixed effect model is a better choice than the OLS model.

pFtest( model.fe, model.ols ) ##

## F test for individual effects

##

## data: crmrte ~ prbarr + prbconv + prbpris + avgsen + polpc + density + ...

## F = 12.181, df1 = 85, df2 = 524, p-value < 2.2e-16

## alternative hypothesis: significant effects4 Random effects

As mentioned in section 2.3, fixed effects assume that there is no correlation across entities’ error term and constants - i.e., that each entity is independent from others.

This assumption can be tested by using a random effects model, where we assume that differences across entities influence our dependent variable, and there is correlation across entities’ error terms and constants.

We use our previous example about R&D investment to illustrate a random effect model. The model can be estimated using the \(plm\) function and specifying \(model = "random"\).

random <- plm( RD ~ RDPub, data=data, index=c("Company", "Year"), model="random" )

stargazer( random,

type = "html",

dep.var.labels = ("Company R&D"),

column.labels = c(""),

covariate.labels = c("Constant", "Public R&D"),

omit.stat = "all",

digits = 2, intercept.bottom = FALSE )| Dependent variable: | |

| Company R&D | |

| Constant | 77,087.49*** |

| (8,281.97) | |

| Public R&D | 1.10*** |

| (0.19) | |

| Note: | p<0.1; p<0.05; p<0.01 |

Interpretation of the coefficients is tricky since they include both the within-entity and between-entity effects. It represents the average effect of X over Y, when X changes across time and between countries by one unit.

There are tests that you can run to chose between fixed and random effects. For instance, the Hausmann test compare a fixed and a random effect model. If the p-value is < 0.05 then fixed effects is a better choice.

phtest( fixed, random )##

## Hausman Test

##

## data: RD ~ RDPub

## chisq = 5.2567, df = 1, p-value = 0.02186

## alternative hypothesis: one model is inconsistent4.1 Notes on random vs fixed effects

- Random effects allows you to include time-invariant characteristics of your entities because it assumes that the error terms of the entity are not correlated with the predictor.

- Random effects is affected by the omitted variable bias problem. Since we are not including dummy variables that account for all time-invariant characteristics of an entity, we need to carefully specify our control variables.

- The choice between random and fixed effects is not random! You need to perform the Hausman test to decide which model is a better fit. Fixed effects is generally a better model (Berry, 2011)

5 Additional resources

Some examples and graphs discussed in this lecture are inspired by “Panel Data Analysis Fixed and Random Effects using Stata” designed by Oscar Torres-Reyna (2007).

Another good presentation of fixed effects and research designed is Christopher Berry (2011).